Bayesian or Frequentist: Choosing your statistical approach

When selecting a statistical approach for your experiments, you’ll likely encounter the long-standing debate between Bayesian and frequentist statistics. Each philosophy offers a distinct perspective on probability and uncertainty, influencing how data is interpreted and decisions are made. The choice between these approaches often depends on several factors: the research question, the availability of prior information, computational resources, personal preferences, and the standard practices within a given organization.

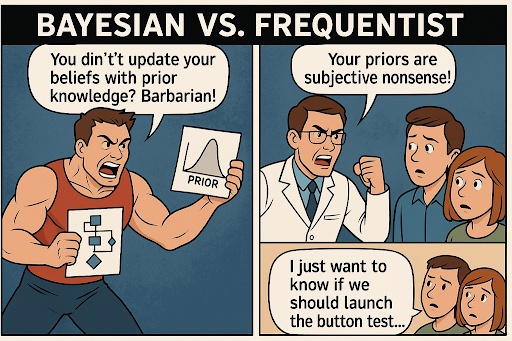

In practice, many statisticians hold strong views favoring one approach over the other, sometimes leading to passionate debates—each side convinced of its superiority. Nevertheless, a growing number of practitioners recognize the value in understanding both frameworks and advocate for using the one best suited to the task at hand. In the sections that follow, we’ll briefly introduce each approach, explore their advantages and limitations, and highlight key differences to help you make informed decisions in your own work.

The Frequentist approach

The roots of frequentist statistics trace back to the early 20th century, with influential work by Ronald Fisher, Jerzy Neyman, and Egon Pearson. They developed key concepts like hypothesis testing, p-values, and confidence intervals, which became the dominant paradigm in various fields.

Frequentist statistics interprets probability as the long-run frequency of events across repeated trials, treating parameters as fixed but unknown constants. This approach focuses on evaluating the likelihood of observed data under a given hypothesis—typically the null hypothesis, which assumes there is no effect or difference. Tools like p-values are used to assess the strength of evidence against the null, while confidence intervals estimate the range of plausible values for the true parameter. Core frequentist methods—such as hypothesis testing and confidence intervals—are widely used in experimentation to draw inferences about population parameters based on sample data.
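As a rough illustration of how a p-value and a confidence interval come out of the same data, the sketch below runs a two-sided two-proportion z-test using only the Python standard library. The function name `two_proportion_ztest` and the conversion counts are invented for this example, and the normal approximation it relies on assumes reasonably large samples.

```python
import math

def two_proportion_ztest(conv_a, n_a, conv_b, n_b, z_crit=1.959964):
    """Two-sided z-test for a difference in conversion rates, plus a 95% CI."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    # Pooled standard error: the null hypothesis assumes both rates are equal.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se_pooled = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = diff / se_pooled
    # Two-sided p-value under the standard normal: P(|Z| >= |z|).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    # Unpooled standard error for the interval around the observed difference.
    se_unpooled = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    ci = (diff - z_crit * se_unpooled, diff + z_crit * se_unpooled)
    return diff, z, p_value, ci

# Hypothetical experiment: 200/2000 conversions in control, 250/2000 in treatment.
diff, z, p_value, ci = two_proportion_ztest(200, 2000, 250, 2000)
print(f"difference = {diff:.4f}, z = {z:.2f}, p-value = {p_value:.4f}")
print(f"95% CI for the difference: ({ci[0]:.4f}, {ci[1]:.4f})")
```

Note that the p-value answers "how surprising is this data if there were no difference?", while the interval conveys the size of the effect. Reading both together helps avoid treating significance as a measure of importance.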

One strength of frequentist approaches is their objectivity: the researcher's prior beliefs about the parameter are not incorporated into the test in any way. Frequentist methods provide a standardized way to assess the significance of results. This has led to their widespread acceptance in many fields, including scientific research and industry.

However, frequentist methods also have limitations. P-values and significance tests are often misinterpreted. For example, people may think that a small p-value implies a large or practically meaningful effect, when it only indicates that the observed data would be unlikely if the null hypothesis were true. Likewise, a non-significant result does not prove that the null hypothesis is true.

Another issue is the dichotomization of results into "significant" or "non-significant" based on an arbitrary threshold (usually 0.05). This can lead to overemphasis on p-values rather than effect sizes and practical significance. It's important to consider the context and magnitude of effects, not just statistical significance.

Frequentist methods can also be sensitive to issues like early stopping and multiple comparisons. Repeatedly checking the data or running many tests increases the risk of false positives, potentially leading to misleading conclusions. While these issues are sometimes overlooked in practice, there are well-established techniques to address them. For example, correcting for multiple comparisons helps control the overall false positive rate, though it may reduce statistical power. Alternatively, sequential testing methods allow for continuous monitoring of data while maintaining statistical validity, offering a more flexible approach when interim analyses are needed.
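To make the multiple-comparisons point concrete, here is a minimal sketch of two common corrections, Bonferroni and Holm, written in plain Python. The helper names and the list of p-values are hypothetical. Both procedures control the family-wise error rate, and Holm is never less powerful than Bonferroni.

```python
def bonferroni(p_values, alpha=0.05):
    """Reject a hypothesis only if its p-value is at most alpha / m."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]

def holm(p_values, alpha=0.05):
    """Holm step-down: compare sorted p-values to alpha/m, alpha/(m-1), ..."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, i in enumerate(order):
        if p_values[i] <= alpha / (m - rank):
            reject[i] = True
        else:
            break  # once one test fails, all larger p-values fail too
    return reject

# Hypothetical p-values from five metrics in the same experiment.
p_values = [0.001, 0.012, 0.03, 0.04, 0.2]
uncorrected = [p <= 0.05 for p in p_values]

print("uncorrected:", uncorrected)
print("bonferroni: ", bonferroni(p_values))
print("holm:       ", holm(p_values))
```

In this made-up example, four of the five metrics look significant without correction, but only one or two survive once the number of tests is taken into account.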

Despite these limitations, frequentist approaches remain popular in practice. They offer a straightforward way to test hypotheses and quantify uncertainty. When used appropriately, with an understanding of their assumptions and limitations, frequentist methods can be valuable tools for data analysis and decision-making.

The Bayesian approach

Bayesian statistics, named after Thomas Bayes, gained momentum in the mid-20th century with the rise of computing power. Key figures such as Bruno de Finetti and Leonard Savage helped formalize the concepts of subjective probability and decision theory, establishing the foundation for modern Bayesian methods. Today, Bayesian techniques are applied across a wide range of fields. In clinical trials, Bayesian adaptive designs allow studies to stop early for efficacy or futility, improving efficiency and ethical oversight. In machine learning, Bayesian optimization is used to tune hyperparameters more efficiently than traditional methods like grid search. Additionally, Bayesian networks—a type of probabilistic graphical model—are widely used for inference and decision-making under uncertainty.

Unlike frequentist statistics, which treats parameters as fixed but unknown, Bayesian statistics views parameters as random variables with associated probability distributions. Prior beliefs about these parameters are updated with observed data to produce posterior distributions. This approach allows for direct computation of the probability of a hypothesis given the data, making interpretations more intuitive and enabling the incorporation of prior knowledge into the analysis.
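The prior-to-posterior update is easiest to see in a conjugate case. The sketch below assumes a conversion rate with a Beta prior and binomial data, where the posterior is again a Beta distribution whose parameters are the prior parameters plus the observed successes and failures. The prior Beta(2, 18) and the data are invented for illustration.

```python
def update_beta(prior_alpha, prior_beta, successes, failures):
    """Conjugate update: Beta prior + binomial data -> Beta posterior."""
    return prior_alpha + successes, prior_beta + failures

def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)

# Hypothetical prior belief: the rate is around 10% (Beta(2, 18)).
prior = (2, 18)
# Hypothetical data: 12 conversions out of 50 users (observed rate 24%).
posterior = update_beta(*prior, successes=12, failures=38)

print("prior mean:    ", beta_mean(*prior))
print("observed rate: ", 12 / 50)
print("posterior:      Beta", posterior)
print("posterior mean:", beta_mean(*posterior))
```

The posterior mean lands between the prior mean and the observed rate. As more data arrives, the data term dominates and the prior matters less.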

Bayesian methods offer several advantages over frequentist approaches. One of the most notable is the intuitive interpretation of results: Bayesian inference provides direct probabilities for hypotheses, such as the probability that a treatment is effective. Thus, unlike frequentist methods, Bayesian approaches make it possible to assess the probability that the null hypothesis is true, rather than simply rejecting or failing to reject it. They also allow researchers to incorporate prior knowledge or expert beliefs into the analysis, which can guide conclusions, especially when data is limited. Finally, Bayesian methods are well-suited for scenarios involving continuous data monitoring.
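One way to obtain such a direct probability is to sample from the posterior of each variant and count how often one beats the other. The sketch below assumes flat Beta(1, 1) priors and uses only the standard library. The function name `prob_b_beats_a` and the counts are hypothetical.

```python
import random

def prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=20000, seed=0):
    """Monte Carlo estimate of P(rate_B > rate_A | data) under flat priors."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Flat Beta(1, 1) prior -> Beta(1 + successes, 1 + failures) posterior.
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += rate_b > rate_a
    return wins / draws

# Hypothetical experiment: 200/2000 conversions in A, 250/2000 in B.
prob = prob_b_beats_a(200, 2000, 250, 2000)
print(f"P(B is better than A) is roughly {prob:.3f}")
```

The result reads as a statement about the hypothesis itself, which is the interpretation many people mistakenly attach to a p-value.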

However, the ability to incorporate prior knowledge—while a strength of the Bayesian approach—can also be its Achilles’ heel. Choosing an appropriate prior distribution is often challenging and may introduce bias if influenced by subjective beliefs or poorly justified assumptions. In addition, Bayesian methods can be computationally demanding. Calculating the posterior distribution typically involves complex integrals that are not analytically solvable, requiring approximation techniques such as Markov Chain Monte Carlo (MCMC), which can be time-consuming and technically complex to implement.
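To give a feel for what MCMC does, here is a deliberately tiny random-walk Metropolis sampler for a single conversion rate under a flat prior. This is a teaching sketch, not a substitute for a library such as Stan or PyMC. The case is chosen because the exact posterior is known (a Beta distribution), so the sampler can be checked against it. All names, data, and tuning values are assumptions.

```python
import math
import random

def log_posterior(theta, successes, failures):
    """Unnormalized log posterior for a rate under a flat prior on (0, 1)."""
    if not 0.0 < theta < 1.0:
        return -math.inf
    return successes * math.log(theta) + failures * math.log(1.0 - theta)

def metropolis(successes, failures, steps=20000, burn_in=2000,
               step_size=0.1, seed=0):
    """Random-walk Metropolis sampler; returns post-burn-in samples."""
    rng = random.Random(seed)
    theta = 0.5
    current = log_posterior(theta, successes, failures)
    samples = []
    for i in range(steps):
        proposal = theta + rng.gauss(0.0, step_size)
        candidate = log_posterior(proposal, successes, failures)
        # Symmetric proposal: accept with probability min(1, posterior ratio).
        if rng.random() < math.exp(min(0.0, candidate - current)):
            theta, current = proposal, candidate
        if i >= burn_in:
            samples.append(theta)
    return samples

# Hypothetical data: 12 conversions, 38 non-conversions.
samples = metropolis(successes=12, failures=38)
mcmc_mean = sum(samples) / len(samples)
analytic_mean = (1 + 12) / (2 + 50)  # mean of the exact Beta(13, 39) posterior

print(f"MCMC posterior mean:     {mcmc_mean:.3f}")
print(f"Analytic posterior mean: {analytic_mean:.3f}")
```

Real models rarely have an analytic answer to compare against, which is why convergence diagnostics and careful tuning become part of the cost of Bayesian analysis.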

Comparing Frequentist and Bayesian approaches in real-world scenarios

The debate between Bayesian and frequentist statistics has long been a contentious one. Frequentists often criticize the subjectivity involved in choosing priors in Bayesian inference, arguing that it can introduce bias. On the other hand, Bayesians contend that frequentist tools—such as p-values—are frequently misunderstood and misused, leading to flawed conclusions.

At the core of the disagreement lie fundamental differences in how each approach treats probability and uncertainty. Bayesian methods use probability to quantify uncertainty in parameters, treating parameters as random variables with distributions. In contrast, frequentists treat parameters as fixed, unknown quantities and apply probability only to data arising from repeated sampling. Bayesian inference combines prior beliefs with observed data to form posterior distributions, while frequentist inference relies solely on the data at hand. This allows Bayesians to assign probabilities directly to hypotheses, whereas frequentist methods focus on rejecting or failing to reject hypotheses based on significance tests.

Despite these philosophical differences, the distinction between the two approaches is not always clear-cut in practice. Many modern methods, such as empirical Bayes techniques and regularization in machine learning, blur the line by incorporating elements of both frameworks. In fact, when using uninformative or "flat" priors, Bayesian and frequentist methods often yield remarkably similar results. This convergence highlights that the choice between the two is not always about right versus wrong, but about which approach best fits the context. Ultimately, both Bayesian and frequentist statistics are valuable tools in the analyst’s toolbox, and understanding their respective strengths and limitations is crucial for effective data analysis.
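The claim about flat priors can be checked numerically. The sketch below compares a frequentist 95% confidence interval (normal approximation) with a Bayesian 95% credible interval under a flat Beta(1, 1) prior for the same hypothetical data. The function names and counts are made up, and the credible interval is approximated by sampling.

```python
import math
import random

def wald_interval(successes, n, z_crit=1.959964):
    """Frequentist 95% confidence interval for a rate (normal approximation)."""
    p_hat = successes / n
    half_width = z_crit * math.sqrt(p_hat * (1 - p_hat) / n)
    return p_hat - half_width, p_hat + half_width

def flat_prior_credible_interval(successes, n, draws=20000, seed=0):
    """Bayesian 95% credible interval under a flat Beta(1, 1) prior."""
    rng = random.Random(seed)
    samples = sorted(
        rng.betavariate(1 + successes, 1 + n - successes) for _ in range(draws)
    )
    # Empirical 2.5% and 97.5% quantiles of the posterior samples.
    return samples[int(0.025 * draws)], samples[int(0.975 * draws)]

# Hypothetical data: 250 conversions out of 2000 users.
ci = wald_interval(250, 2000)
cred = flat_prior_credible_interval(250, 2000)

print(f"95% confidence interval: ({ci[0]:.4f}, {ci[1]:.4f})")
print(f"95% credible interval:   ({cred[0]:.4f}, {cred[1]:.4f})")
```

With this much data the two intervals nearly coincide, even though they answer different questions. The gap widens with small samples or informative priors, which is where the choice of framework starts to matter.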

For example, in experiments with large sample sizes and well-defined hypotheses, frequentist methods such as t-tests and ANOVA are often preferred. They are robust and grounded in well-established theoretical principles like the central limit theorem and the law of large numbers. These tools are especially effective when prior information is scarce or unreliable.

Conversely, Bayesian methods shine in situations with small sample sizes or when prior knowledge is available and valuable. By updating prior beliefs with new data, Bayesian inference supports iterative experimentation and decision-making, making it particularly well-suited for adaptive designs and real-time analytics. For example, in time-sensitive scenarios where quick decisions must be made with limited data, a Bayesian approach with informative priors can yield more actionable insights than a frequentist method that depends solely on the data collected so far. Thanks to advances in computational power, Bayesian methods—once considered too complex for routine use—are now widely accessible, complementing classical frequentist techniques.

Practical considerations in choosing the statistical approach

In real-world applications, the choice between frequentist and Bayesian approaches depends on several practical factors:

The nature and size of the dataset: Bayesian methods often perform better with small sample sizes, especially when prior information is available. In contrast, frequentist approaches generally require larger samples for stable and reliable estimates.

The complexity of the hypotheses being tested: More complex models or adaptive experimental designs may benefit from the flexibility and modularity of Bayesian methods.

The availability and reliability of prior knowledge: When strong, well-justified priors exist, Bayesian methods can enhance inference and support better decision-making. However, in the absence of informative priors—or when objectivity is a concern—frequentist methods may be more appropriate.

The specific goals, risks, and constraints of the experiment: Bayesian methods are well-suited for real-time decision-making, adaptive testing, and belief updating. Frequentist approaches remain the gold standard for formal hypothesis testing and confidence interval estimation.

The characteristics and expertise of your team: When scaling experimentation, consider the team's familiarity with each framework. Frequentist methods are often more familiar and accessible to non-statisticians, while Bayesian approaches may require more advanced statistical knowledge and computational resources.

Fortunately, a wide range of statistical software supports both Bayesian and frequentist analyses, making these methods increasingly accessible:

R: bayesAB, bayestestR, rstan

Python: PyMC (formerly pymc3), scipy.stats

SAS: PROC MCMC, PROC GENMOD

SPSS: Bayesian Statistics module

By carefully considering these factors—along with the tools and resources available—practitioners can make well-informed decisions about which statistical framework to use. Whether you choose a frequentist, Bayesian, or hybrid approach, the key is to align your method with the goals of your experiment, the structure of your data, and your team's capabilities.

At Statsig, we believe it’s more productive to view Bayesian and frequentist methods not as opposing philosophies, but as complementary perspectives. That’s why we support tools and methods from both approaches—so you can apply the right technique for the right context, ultimately enhancing the quality, reliability, and impact of your analysis.
