Product Updates

🔐 OAuth Support for Statsig MCP Server

We’ve upgraded authentication for the Statsig MCP Server to support OAuth, supplementing the previous key-based authentication flow. This brings a more secure, scalable, and standards-aligned approach to connecting your MCP tooling with Statsig.

Why this matters

OAuth makes it easier and safer for teams to integrate the Statsig MCP Server into their development workflows and into more tools. It enables clearer permission boundaries, smoother onboarding, persistent sessions, and better alignment with modern enterprise security practices.

Getting started

Follow the updated setup instructions in our docs to enable OAuth for your MCP Server connection. No changes are required to your existing Statsig feature flags or experimentation setup; just update your authentication method to take advantage of the new flow.

Learn more in the docs here: https://docs.statsig.com/integrations/mcp

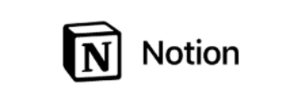

🕵 Traces Explorer Now in Beta

Traces show how a single request moves through your system, one step at a time. Statsig now lets you explore those spans alongside your experiments and feature rollouts.

Traces Explorer means faster root-cause analysis. You no longer need to jump between tools to understand slow or failing requests: Statsig brings traces, logs, metrics, and alerting into one place for critical launches.

Bring observability into your product decision loop.

Traces Explorer (Beta) is available for Cloud customers. View trace setup instructions here.

🔌 Reverse Power

What is Reverse Power?

Reverse Power shows the smallest effect size your test had the power to detect, based on the actual sample size and the standard error of the control group. It does not depend on the experiment’s observed effect size. It’s a great tool to use retrospectively, near the end of an experiment, to make more informed decisions.
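To make the idea concrete, here is a minimal sketch of a reverse power (minimum detectable effect) calculation in Python. It assumes a two-sided z-test, applies the control group’s standard deviation to both arms, and uses conventional defaults of alpha = 0.05 and 80% power; Statsig’s exact method and defaults may differ, and the function name `reverse_power_mde` is hypothetical. Note that the observed effect never appears as an input.

```python
from math import sqrt
from statistics import NormalDist


def reverse_power_mde(
    control_std: float,
    n_control: int,
    n_test: int,
    alpha: float = 0.05,
    power: float = 0.80,
) -> float:
    """Smallest absolute difference in means the test could detect.

    Assumes a two-sided z-test and that both groups share the control
    group's standard deviation. The observed effect is not an input.
    """
    if n_control <= 0 or n_test <= 0:
        raise ValueError("sample sizes must be positive")
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_power = std_normal.inv_cdf(power)  # quantile matching the target power
    # Standard error of the difference in means, using the control variance for both arms.
    se_diff = control_std * sqrt(1 / n_control + 1 / n_test)
    return (z_alpha + z_power) * se_diff


# Example: control standard deviation of 10 with 10,000 units per group.
mde = reverse_power_mde(control_std=10.0, n_control=10_000, n_test=10_000)
print(f"MDE: {mde:.4f}")
```

Dividing the result by the control mean expresses the MDE as a relative lift. Because the MDE shrinks with the square root of the sample size, quadrupling the sample roughly halves it, which is why extending or rerunning with a larger sample can help when the effect you care about is below this threshold.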

How is it used?

Helps you reflect on what your experiment could actually detect

Helps you make iteration decisions, such as extending the experiment, rerunning it with a larger sample, or re-evaluating the experiment design

Getting started

Toggle it on or off anytime in Settings → Product Configuration → Experimentation. To learn more about Reverse Power, see our docs here.

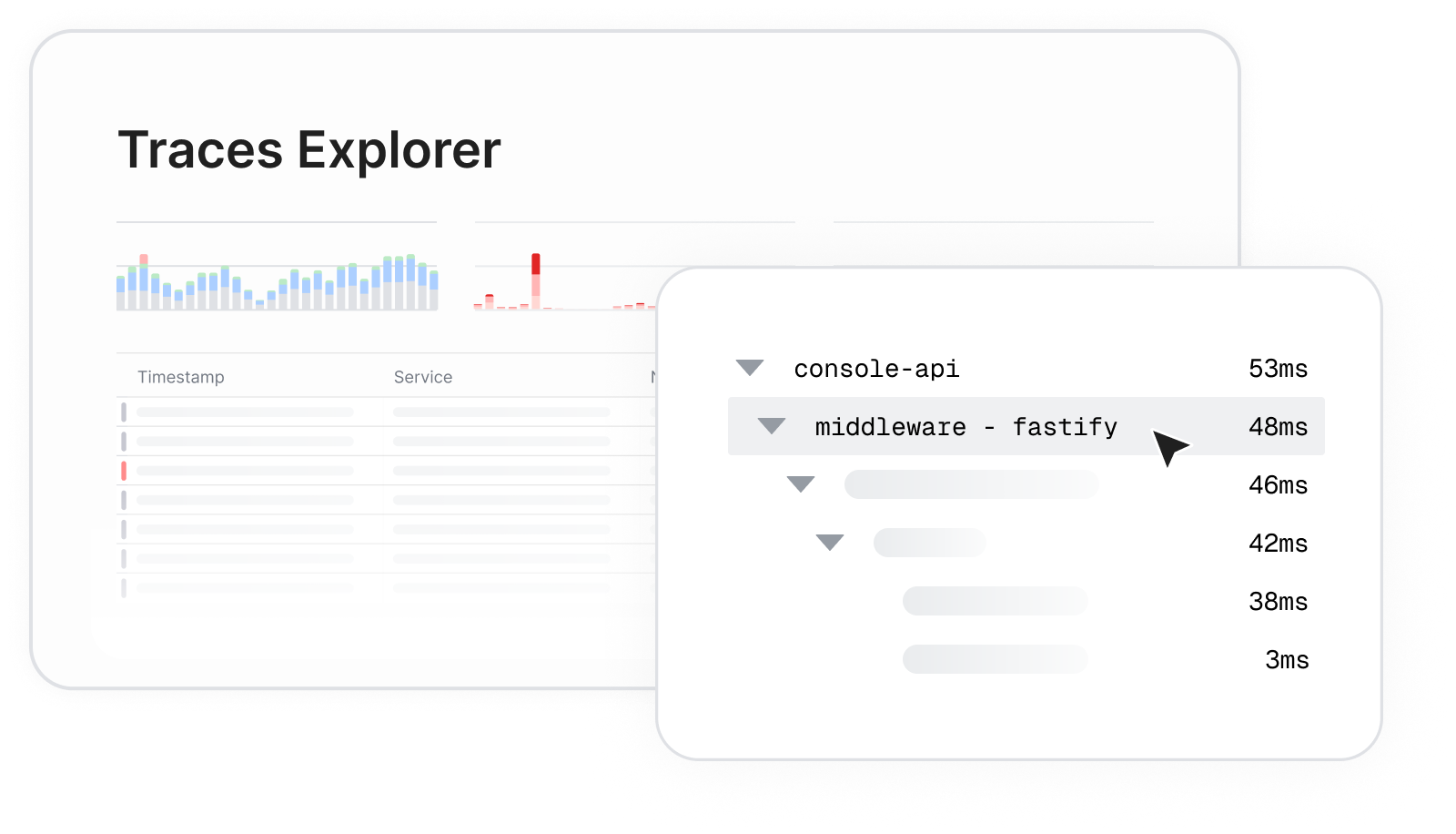

🕳️ Results in Lower Environments

For our Cloud users, we’ve added support for viewing cumulative exposure and metric results for experiments enabled in lower environments, helping you catch issues early and ship experiments with confidence.

This makes it easier to verify that:

Users are being bucketed correctly

Your metrics are logging as expected

To enable this, go to the experiment setup page, select Enable for Environments, run tests in your lower environment, and view real exposure and metric data before launching to production.

For more information on the new lower-environment testing features, see the docs here.

🚨 Topline Alerts on Metrics

Topline Alerts work best when they track the exact metric your team relies on. Until now, Topline Alerts could only be created from events, which meant rebuilding the metric logic each time. That increased the risk of duplicated definitions, drift over time, and confusion about which definition is the “real” one.

This update fixes that. You can now create Topline Alerts using existing metrics in your Metrics Catalog. The alert uses the metric’s definition exactly as it is, so everything stays consistent and aligned. No rewriting. No re-creating logic. No guessing which version is correct.

It keeps your metrics clean, keeps ownership clear, and removes the risk of definitions drifting as teams grow.

🍋 Metric Source Freshness

Statsig WHN users can now check the freshness of their experiment Metric Source data directly from the experiment results page. The page displays the most recent timestamp found in a Metric Source during results computation, helping users spot data pipeline issues that could delay results.
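A freshness check like this boils down to taking the newest timestamp in a source and comparing it to the current time. The Python sketch below illustrates that idea; the function name, the in-memory list of timestamps, and the 24-hour staleness threshold are assumptions for illustration, not how Statsig computes or displays freshness.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional, Sequence, Tuple


def source_freshness(
    timestamps: Sequence[datetime],
    now: datetime,
    max_lag: timedelta = timedelta(hours=24),
) -> Tuple[Optional[datetime], Optional[timedelta], bool]:
    """Return (latest timestamp, lag behind `now`, is_stale) for one source."""
    if not timestamps:
        # No rows at all: treat the source as stale.
        return None, None, True
    latest = max(timestamps)  # the freshness value: newest row seen
    lag = now - latest
    return latest, lag, lag > max_lag


now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
rows = [
    datetime(2024, 1, 1, 6, 0, tzinfo=timezone.utc),
    datetime(2024, 1, 2, 3, 0, tzinfo=timezone.utc),
]
latest, lag, stale = source_freshness(rows, now)
print(latest, lag, stale)
```

A large lag on one source while others are current usually points at that source’s upstream pipeline rather than at the experiment itself.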

🧏‍♀️ Recent Queries in Logs Explorer

Debugging usually means retracing your steps. You try something, flip tabs, run a new search, and then can’t remember the exact query that actually pointed you in the right direction. It breaks your flow and slows everything down.

Recent Queries fixes that. Logs Explorer now shows your last five searches right in the search menu. No more digging through tabs or guessing what you ran before. Jump back into earlier investigation paths, compare results in seconds, and pick up your workflow without losing momentum.

A small change with a big payoff for staying in the zone.
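Conceptually, a “last five searches” list is a small bounded buffer ordered newest first. Here is a minimal Python sketch; the class name, the `limit` parameter, the made-up query strings, and the choice to move a repeated query to the front instead of storing it twice are all assumptions, not a description of the Logs Explorer implementation.

```python
from collections import deque
from typing import Deque, List


class RecentQueries:
    """Keeps the most recent distinct queries, newest first."""

    def __init__(self, limit: int = 5) -> None:
        # A deque with maxlen drops the oldest entry automatically once full.
        self._items: Deque[str] = deque(maxlen=limit)

    def record(self, query: str) -> None:
        # Re-running an earlier query moves it to the front rather than duplicating it.
        if query in self._items:
            self._items.remove(query)
        self._items.appendleft(query)

    def recent(self) -> List[str]:
        return list(self._items)


history = RecentQueries()
for q in ["status:500", "service:checkout", "latency>2s", "user:42", "region:eu", "status:500"]:
    history.record(q)
print(history.recent())
```

Using `deque(maxlen=...)` keeps eviction automatic: once five entries are stored, adding a new one at the front silently drops the oldest at the back.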

🏡 Metric Family

Managing a large metric catalog can be challenging, especially when many metrics are slight variants of a single source metric. Ensuring that updates to the main metric cascade correctly to all of its variants is difficult and error-prone.

For our WHN customers, we’re excited to introduce Metric Families. Users can now create Child Metrics (variants) from a Parent Metric, ensuring that any changes to the Parent automatically flow down to its Children. This makes it easier to manage large catalogs while giving teams the flexibility to create and maintain metric variants without losing consistency.

The feature is available for Sum and Count metric types. Follow this link to learn more.
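One way to picture the parent/child relationship is that a child stores only a reference to its parent plus its own refinements, and reads everything else from the parent when it is resolved. The Python sketch below models that idea; the field names (`source_table`, `value_column`, `filters`) and the SQL-like filter strings are hypothetical and are not Statsig’s metric schema.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

SUPPORTED_AGGREGATIONS = {"sum", "count"}  # Metric Families support Sum and Count metrics


@dataclass
class ParentMetric:
    name: str
    source_table: str
    aggregation: str
    value_column: Optional[str] = None
    filters: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.aggregation not in SUPPORTED_AGGREGATIONS:
            raise ValueError(f"unsupported aggregation: {self.aggregation}")


@dataclass
class ChildMetric:
    name: str
    parent: ParentMetric
    extra_filters: List[str] = field(default_factory=list)

    def resolved(self) -> Dict[str, object]:
        # Inherited fields are read from the parent at resolution time,
        # so any edit to the parent flows down to every child.
        return {
            "name": self.name,
            "source_table": self.parent.source_table,
            "aggregation": self.parent.aggregation,
            "value_column": self.parent.value_column,
            "filters": self.parent.filters + self.extra_filters,
        }


revenue = ParentMetric("revenue", "purchases", "sum", "amount", ["status = 'completed'"])
mobile_revenue = ChildMetric("revenue_mobile", revenue, ["platform = 'mobile'"])

revenue.source_table = "purchases_v2"  # update the parent once...
print(mobile_revenue.resolved())       # ...and the child picks up the change
```

Resolving at read time, rather than copying the parent’s fields into each child when it is created, is what keeps variants from drifting away from their parent.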

🧾 Experiment Results Table View

Today we're excited to announce Table View for experiment results. It's perfect for users who want to examine their experiment results in greater detail while keeping everything consolidated in a single view.

With Table View, users can now see Control Mean, Test Mean, and P-value—in addition to the data points available in the default cumulative view.

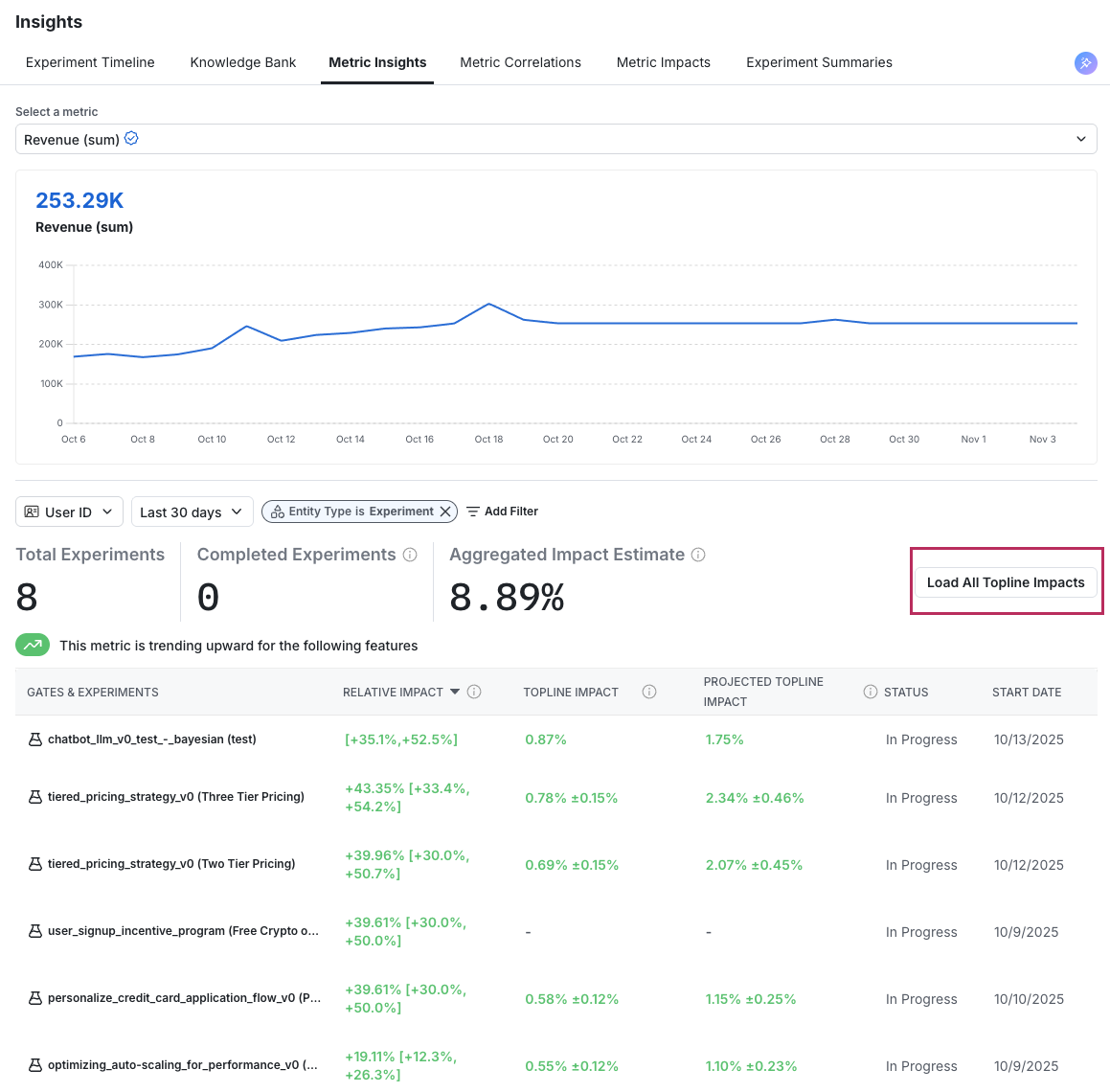

📚 Bulk Topline Impact Calculation

Many users want to understand how the metric changes measured in their experiment translate into shifts in the overall metric (topline impact), or to project the expected impact if the variant were rolled out to the broader population (projected topline impact).

For our WHN customers, calculating topline impact just got easier with the launch of our bulk upload feature. Users can now update this data centrally from the Experiment Insights Page, making it easier than ever to measure the aggregate impact of experiments on key business metrics.
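As a rough illustration of the arithmetic behind topline and projected topline impact, the sketch below scales the per-unit difference between test and control by the number of units it applies to, then expresses the result as a share of the topline total. This simplified model, including the parameter names and the choice to divide by the observed topline total, is an assumption for illustration and is not Statsig’s topline impact methodology. The bulk helper applies the same calculation to many rows at once.

```python
from typing import Dict, List, Tuple


def topline_impact(
    control_mean: float,
    test_mean: float,
    n_test_units: int,
    n_eligible_units: int,
    topline_total: float,
) -> Tuple[float, float]:
    """Return (topline impact, projected topline impact) as fractions of topline_total."""
    if topline_total <= 0:
        raise ValueError("topline_total must be positive")
    lift_per_unit = test_mean - control_mean
    # Impact realized by the units that were actually in the test group.
    observed = lift_per_unit * n_test_units / topline_total
    # Impact if every eligible unit received the test variant.
    projected = lift_per_unit * n_eligible_units / topline_total
    return observed, projected


def bulk_topline_impact(rows: List[Dict[str, float]]) -> List[Tuple[float, float]]:
    """Apply topline_impact to many metric rows (e.g. parsed from an uploaded CSV)."""
    return [
        topline_impact(
            row["control_mean"],
            row["test_mean"],
            int(row["n_test_units"]),
            int(row["n_eligible_units"]),
            row["topline_total"],
        )
        for row in rows
    ]


rows = [
    {"control_mean": 10.0, "test_mean": 10.5, "n_test_units": 1_000,
     "n_eligible_units": 10_000, "topline_total": 100_000.0},
]
print(bulk_topline_impact(rows))
```

In this toy model the projected figure is always the observed figure scaled by the ratio of eligible units to test units, which is why projected topline impact is typically much larger than what the experiment itself moved.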
