In the tech world, an increasing number of product teams are embracing experimentation, often referred to as A/B testing.

Teams use A/B testing to continually improve product experiences and foster innovation. The shift marks a departure from relying solely on intuition, guesswork, or a handful of data points.

Product teams of every size are adopting a more rigorous approach to refining their products. Many companies, whether they use in-house tools or third-party solutions like Statsig, are striving to embed a culture of experimentation into their organizational DNA.

Surprisingly, relatively few designers are actively involved in the experimentation workflow or skilled at using experiments to build confidence in the design solutions they deliver to users.

In my experience, I've met designers who have either never participated in an A/B test or struggle to see how they can use experimentation to try out their ideas effectively. Some have even shared experiment examples without realizing that a less-than-ideal setup could lead to misleading interpretations, which was an eye-opening realization.

During my time at Facebook, I worked closely with a suite of advanced internal tools for deploying features and running experiments, which helped teams learn from their launches. Every launch was wrapped in a feature flag, and experiments ran frequently.

My team held weekly reviews to evaluate feature performance against key metrics tied to our hypotheses, which drove informed decisions about the team's next steps. Importantly, designers were actively involved in the entire process, from inception to completion.

Seeing how effective a data-driven process could be at that scale, and watching products evolve over time, reinforced my conviction that experimentation should be a cornerstone of a designer's toolkit, seamlessly integrated into the design process.

You might be wondering:

“What are the essential aspects designers need to understand about experimentation?”

“If my team plans to conduct experiments and seeks my input to design novel user experiences, what steps should I take?”

“How can I harness the power of experimentation for new initiatives?”

“How can I enhance my skills in experimenting with fresh ideas that could lead to something great inside the product?”

To keep things practical, I'd like to begin by emphasizing that designers don't need to master the complex terminology and methodologies of experimentation that engineers and data scientists use.

Additionally, designers don't need to know how to set up experiments themselves or even decide which metrics to track (although it's wise to be deliberate about which key metrics you aim to impact through your design explorations).

Generally, the technical aspects can be left to cross-functional colleagues, unless designers are willing to step beyond their comfort zones to gain a broader understanding.

The "why" and "how" before diving into pixels

The role designers play in the experimentation process might seem simple: crafting UI/UX for each variation to be tested.

That's true, but experimentation calls for a more thoughtful approach that starts even before ideas are translated into tangible designs. It begins with asking a series of questions that form a solid foundation for using experimentation as a tool.

Generally speaking, designers should be able to think critically about a given problem and understand how running experiments could help resolve it. Note that experiments don't solve problems directly; instead, they provide insights and knowledge that can be folded into new product experiences through iteration.

When conducting design experiments, it’s the responsibility of the designer to consider the following:

What is the problem?

Why are we addressing this particular problem?

Why is this problem worth solving?

Why is experimentation suitable for this situation?

How will we use experimentation to solve this problem?

How many design variations should we test, and what are we trying to learn from each one?

How well are the design variations justified by the supporting data points (if there are any) or by the hypothesis we formed?

How much investment, in terms of design and engineering cost, is appropriate for these design variations? Is there an opportunity to still learn at minimal cost?

How can we learn from these design variations? What success metrics are we tracking? What metrics should we set as guardrails?

How are we planning to iterate based on our findings?
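To make the guardrail question more concrete, here is a minimal Python sketch of how a team might check whether a variation regressed any guardrail metric. The `MetricResult` class, the `guardrail_violations` helper, the metric names, and the 2% tolerance are all hypothetical illustrations, not Statsig's API or a standard threshold; in practice, a data scientist would also account for statistical noise before calling a change a regression.

```python
from dataclasses import dataclass


@dataclass
class MetricResult:
    name: str
    control: float     # metric value in the control group
    treatment: float   # metric value in the test variation
    higher_is_better: bool

    @property
    def relative_change(self) -> float:
        return (self.treatment - self.control) / self.control


def guardrail_violations(guardrails, max_regression=0.02):
    """Return names of guardrail metrics that got worse by more than
    max_regression, expressed as a relative change (0.02 = 2%).

    The 2% default is a hypothetical tolerance chosen for illustration.
    """
    violations = []
    for m in guardrails:
        # Flip the sign so a positive value always means "got worse".
        regression = -m.relative_change if m.higher_is_better else m.relative_change
        if regression > max_regression:
            violations.append(m.name)
    return violations


# Hypothetical guardrails for a landing-page variation.
guardrails = [
    MetricResult("page_load_ms", control=1800, treatment=1900, higher_is_better=False),
    MetricResult("scroll_depth", control=0.60, treatment=0.595, higher_is_better=True),
]
print(guardrail_violations(guardrails))  # → ['page_load_ms']
```

Here, a variation that slows the page from 1,800 ms to 1,900 ms (about 5.6% slower) is flagged, while a small dip in scroll depth (under 1% relative) stays within tolerance. Flipping the sign lets a single threshold cover both metrics where higher is better and metrics where lower is better.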

Sometimes designers find themselves excluded from early discussions, or from context that other cross-functional team members were part of.

While this isn't the optimal workflow, designers should probe for the project's context and nuances before diving into design details. Furthermore, as experts in product development, designers should participate actively in the ideation process.

These initial stages of experimentation provide ample opportunities for designers to contribute valuable insights and establish directions for exploring user experiences.


The next steps

As the answers to the questions above take shape, team members will gradually align and begin generating ideas for design variations.

At this point, designers play a pivotal role: they know the design system inside and out, advocate for users, envision user journeys, and bring deep product expertise. As such, designers should feel empowered to voice their opinions, whether in agreement or dissent, about the proposals team members put forward during discussions.

More importantly, this is an opportunity for designers to present and explore their own perspectives.

Designers should not rely solely on intuition (although it remains valuable) but should also bring pertinent data points. These might be insights from UX research or a proactive exploration of data (with or without help from a data scientist).

For instance, consider a scenario in which the team aims to improve the conversion rate from landing page views to product views by experimenting with various ideas:

Instead of immediately ideating or embracing proposed solutions, designers should seek to uncover data points. They might collaborate with UX researchers to understand how users perceive the current landing page experience and what improvements they desire. They could also dive into quantitative data alongside a cross-functional member, such as a data scientist, to extract key insights.

They might also explore metrics like page load times, scroll behavior, initial click interactions, and more. A deeper, data-backed understanding of user behavior helps designers make informed decisions about which directions to explore in the variations they design for the experiment.
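For the landing-page scenario above, here is a minimal Python sketch of how the results might be read once an experiment has run. It computes each variant's landing-to-product conversion rate and a Wald 95% confidence interval for the difference between two proportions; if the interval excludes zero, the difference is treated as conclusive. The `Variant` class, the `compare` helper, the variant names, and all counts are hypothetical, and real experimentation platforms may apply more careful statistical methods than this.

```python
import math
from dataclasses import dataclass


@dataclass
class Variant:
    name: str
    landing_views: int  # users who saw the landing page
    product_views: int  # of those, users who went on to view a product

    @property
    def conversion_rate(self) -> float:
        return self.product_views / self.landing_views


def compare(control: Variant, treatment: Variant, z_crit: float = 1.96):
    """Compare conversion rates with a Wald confidence interval.

    Returns (absolute lift, (low, high), conclusive). With z_crit = 1.96
    the interval is an approximate 95% confidence interval.
    """
    p1, n1 = control.conversion_rate, control.landing_views
    p2, n2 = treatment.conversion_rate, treatment.landing_views
    lift = p2 - p1
    # Unpooled standard error of the difference between two proportions.
    se = math.sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    low, high = lift - z_crit * se, lift + z_crit * se
    # Conclusive only if the whole interval sits on one side of zero.
    conclusive = low > 0 or high < 0
    return lift, (low, high), conclusive


# Hypothetical counts: the same 12% vs 13.2% rates at two sample sizes.
for views, control_hits, treatment_hits in [(10_000, 1_200, 1_320), (1_000, 120, 132)]:
    control = Variant("control", views, control_hits)
    treatment = Variant("new_hero", views, treatment_hits)
    lift, (low, high), conclusive = compare(control, treatment)
    print(f"n={views}: lift={lift:+.3f}, 95% CI=({low:+.3f}, {high:+.3f}), "
          f"conclusive={conclusive}")
```

Both hypothetical comparisons show the same 1.2-point lift, but only the one with 10,000 landing views per variant produces an interval that excludes zero; with 1,000 views per variant, the result is inconclusive. This is one reason the same design idea can look like a winner in one test and come back inconclusive in another.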

Experimentation advice for designers

Combine Intuition with Data: While formulating hypotheses, trust your intuition, but seek out data points to substantiate them. This approach streamlines your design explorations, leading to more insightful results and efficient use of time.

Stay Engaged with Experiment Results: Stay involved when experiment results are shared. Many experiments produce winning solutions that can still be refined before the final rollout, while many others yield inconclusive outcomes that leave room for further improvement.

Embrace Curiosity about Data: Cultivate curiosity about data interpretation and analysis. The better you understand data insights, the more effectively you can steer your design decisions.

Internalize and Share Knowledge: Absorb the lessons learned from experiments and contribute to knowledge sharing within your team and organization.


Closing thoughts

Experimentation can seem daunting, and its scientific connotations sometimes overwhelm designers. Nevertheless, designers can extract significant value from experimentation and real user data, which can fill gaps left by conventional UX research.

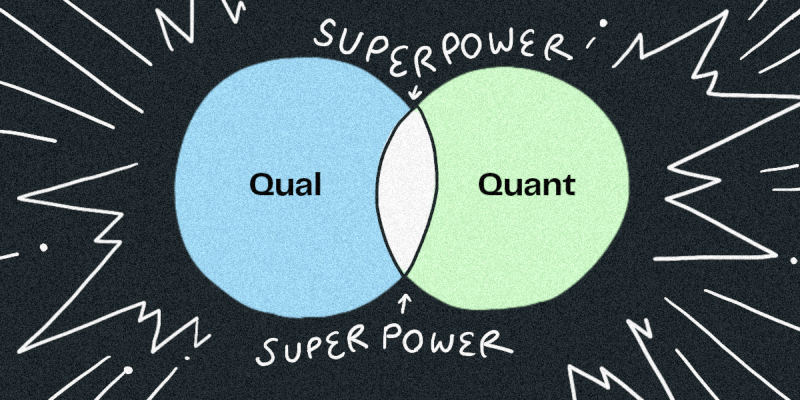

From my perspective, designers should draw on data through both feature testing and UX research (UXR), either individually or in tandem. Experimentation lets designers test hypotheses at substantial scale, with real users in real-world scenarios.

Meanwhile, UXR yields detailed, unfiltered feedback through usability and concept testing, capturing comprehensive directional insights before engineers undertake significant development work.

As experimentation tools and methodologies become further ingrained in the culture of product teams and companies, designers gain more opportunities to use them to derive insights that shape exceptional user experiences.

When it comes to designing variations for testing, designers should approach changes with a clear purpose. While simple alterations like button color or placement are straightforward, complex changes such as layout adjustments demand deliberate consideration. Designers should contemplate the outcomes they aim to achieve from the experiment and anticipate what insights they can garner to guide further iterations.

Frequently, the inspiration for new user experiences originates from qualitative and/or quantitative data, which designers can help uncover and leverage to their advantage.

(Thanks to Cat Lee, our Brand Designer, for the amazing set of illustrations)
