Faster Pulse, Environments in Overrides, Experiment Duration by Exposures

Happy Friday, Statsig Community! To cap off a beautiful week here in Seattle ☀️, we have a number of exciting launch updates to share:

🕒 Fast(er) Pulse

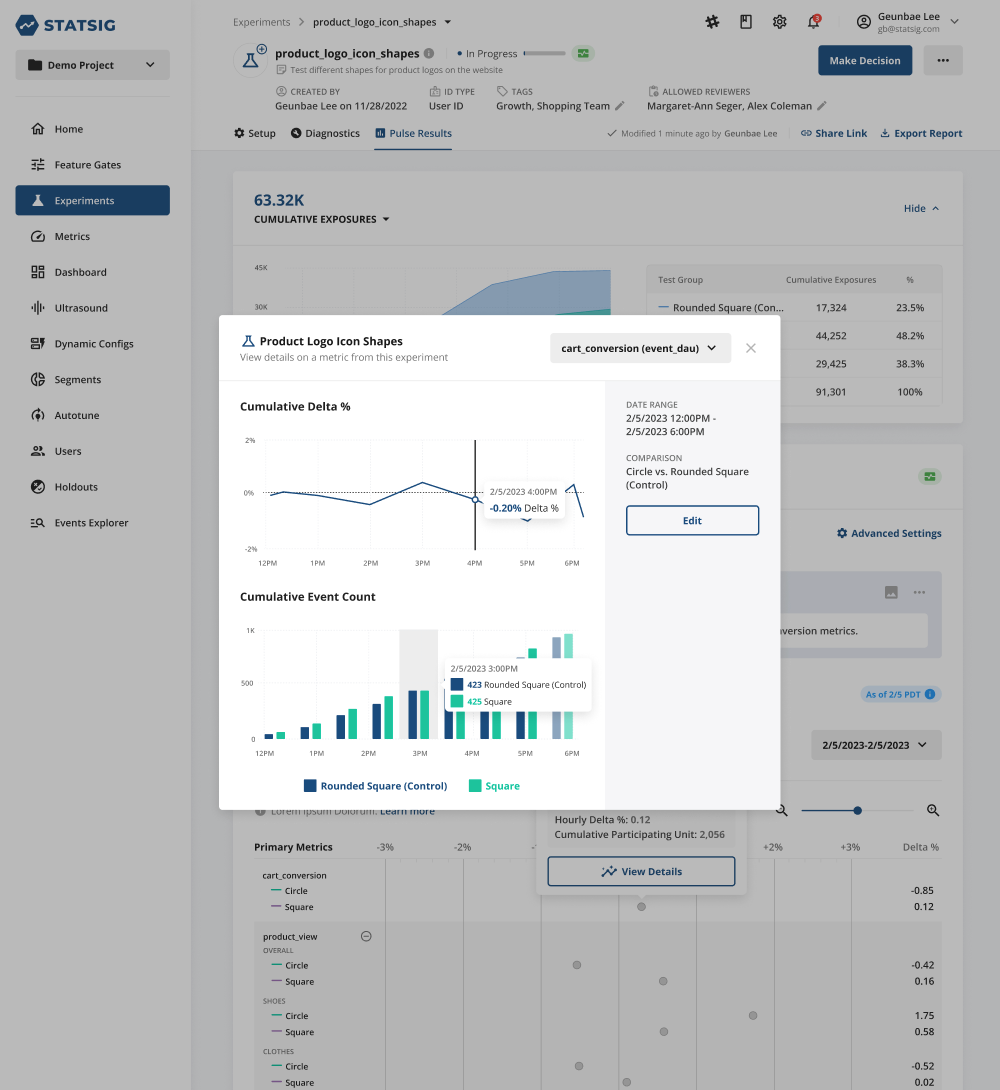

To date, when you launched a new feature roll-out or experiment, you had to wait 24 hours to start seeing your Pulse results. Today, we’re very excited to shorten that time significantly with the launch of more real-time Pulse. Now, you will see Pulse results start to flow through within 10-15 minutes of starting your roll-out or experiment.

A few things to consider:

For the first 24 hours, results do not include confidence intervals; early metric lifts are meant to help you ensure that things are looking roughly as expected and verify the configuration of your gate/experiment, NOT to make any launch decisions.

The Pulse hovercard view will look a bit different; time-series and top-line impact estimates will not be available until the first 24-hour daily lift calculation has run.

☁️ Environments in Overrides

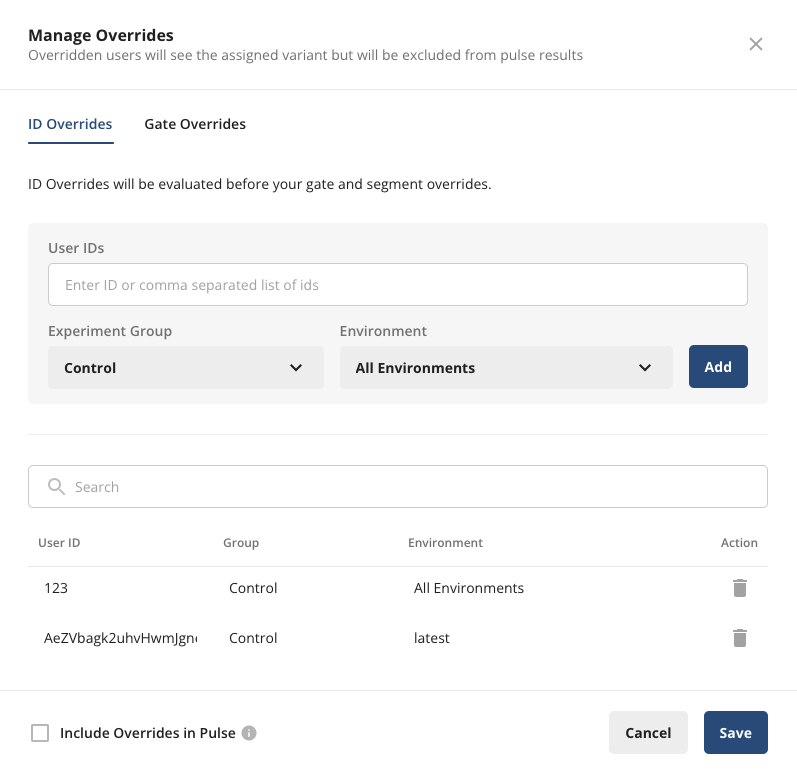

At some companies, a user may have a different ID in each environment, so you may want to specify the environment in which a given ID is overridden. To enable this, we’ve added the ability to specify a target environment for Overrides in Experiments. For Gates, you can achieve this by creating an environment-specific rule.
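To make the idea concrete, here is a minimal conceptual sketch of environment-scoped overrides. It is not Statsig's implementation or SDK API: the `OVERRIDES` table, the `resolve_override` helper, the example IDs, and the rule that an override with no environment applies everywhere are all assumptions made for illustration.

```python
from typing import Optional

# Hypothetical override table: (environment, user_id) -> group name.
# An environment of None is assumed to mean "applies in every environment".
OVERRIDES = {
    ("staging", "user-123"): "Test",
    ("production", "user-987"): "Control",
    (None, "qa-bot"): "Test",
}


def resolve_override(user_id: str, environment: str) -> Optional[str]:
    """Return the overridden group for this ID in this environment, if any."""
    # Prefer an override scoped to the current environment.
    env_specific = OVERRIDES.get((environment, user_id))
    if env_specific is not None:
        return env_specific
    # Otherwise fall back to an override that is not tied to an environment.
    return OVERRIDES.get((None, user_id))
```

In this sketch, `user-123` is forced into "Test" only in staging, so the same ID in production falls through to normal assignment.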

⌛ Experiment Duration by # Target Exposures

(vs. Strictly Time Duration)

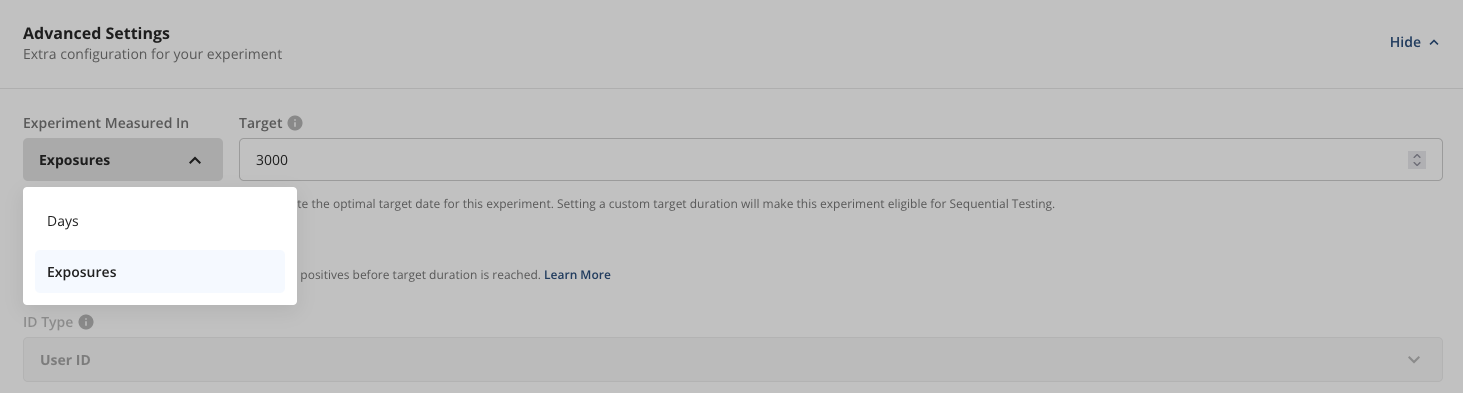

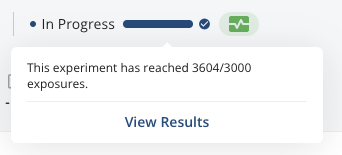

We’re introducing more flexibility into how you can measure & track experiment target duration. Now, you can choose between setting a target # of days or a target # of exposures an experiment needs to hit before a decision can be made.

To configure a target # of exposures, tap “Advanced Settings” in the Experiment Setup tab, then under “Experiment Measured In” select “Exposures” (vs. “Days”). The progress tracker at the top of your experiment will now show progress toward the target number of exposures.
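As a rough illustration of the difference between the two modes, the sketch below computes progress against either a day target or an exposure target. The `DurationTarget` class and `progress` function are hypothetical names for this example, not part of the Statsig console or SDKs, and capping progress at 100% is an assumption.

```python
from dataclasses import dataclass


@dataclass
class DurationTarget:
    """Hypothetical model of an experiment's target duration."""
    unit: str    # "days" or "exposures"
    target: int  # target number of days or exposures


def progress(target: DurationTarget, days_elapsed: int, exposures: int) -> float:
    """Return the fraction of the target reached, capped at 1.0."""
    if target.target <= 0:
        raise ValueError("target must be positive")
    if target.unit == "days":
        observed = days_elapsed
    elif target.unit == "exposures":
        observed = exposures
    else:
        raise ValueError(f"unknown unit: {target.unit}")
    return min(observed / target.target, 1.0)
```

For example, an experiment that has run 7 days and collected 5,000 exposures is halfway to a 14-day target but only a quarter of the way to a 20,000-exposure target.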

See our docs for more details.
