Product Updates

💻 Web Analytics

Web Analytics makes it dead simple to track and monitor key metrics for your website.

Debuting on our suite of JavaScript SDKs, Web Analytics auto-captures key user-generated events like page views, errors, page performance stats, clicks, and form submits. With each of these auto-captured events, we include key user and website page metadata, making advanced exploration easy out-of-the-box.

Once you’ve gotten up and running with Web Analytics, you can easily:

Explore your website’s engagement via Metrics Explorer

Look at your user engagement trends via Statsig’s auto-generated suite of User Accounting metrics, including DAU/WAU/MAU, stickiness, and retention

Curate and share your own custom set of dashboards, applying custom filters and aggregations to your suite of auto-captured events

Read more in our docs & let us know if you have any feedback or questions!

🎯 Inline Targeting Criteria

Often, you may want to experiment on a specific group of people as defined by targeting criteria. To date, to accomplish this, you’ve had to define a Feature Gate with your targeting criteria and then reference this Feature Gate from the experiment.

While this is useful if your targeting criteria are relatively common (and you’ll want to reuse them in a future experiment or rollout), it can introduce extra configs and overhead if the targeting criteria only serve the experiment in question.

We say: extra overhead and unnecessary configs cluttering up your catalog, BE GONE!

Today, we’re starting to roll out the ability to define targeting criteria inline within your experiment setup. This will be accessible via the same entry point where you can select an existing Feature Gate to target your experiment against (don’t worry, that capability isn’t going anywhere).

Read more in our docs here and don’t hesitate to reach out if you have any questions!

Stratified Sampling (for B2B experiments)

For B2B experiments on small sample sizes (or tests where a tail end of power users drives a large portion of an overall metric value), randomization alone doesn't cut it. Your Control and Test groups may not be well balanced if your whales end up concentrated in one group.

This new Statsig feature meaningfully reduces false positive rates and makes your results more consistent and trustworthy. It tries 100 different randomization salts and then compares the split between groups based on a metric or classification you provide to find the best balance. In our simulations, we see around a 50% decrease in the variance of reported results.
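To make the salt search concrete, here is a minimal sketch of the idea. It is not Statsig's implementation: the SHA-256 bucketing, the function names, the "difference in group means" balance score, and the sample revenue numbers are all assumptions for illustration.

```python
import hashlib
from statistics import mean


def assign(unit_id: str, salt: str) -> str:
    """Deterministically bucket a unit into 'control' or 'test' for a given salt."""
    digest = hashlib.sha256(f"{salt}:{unit_id}".encode()).hexdigest()
    return "test" if int(digest, 16) % 2 else "control"


def imbalance(metric_by_unit: dict, salt: str) -> float:
    """Absolute difference in the mean metric value between the two groups."""
    groups = {"control": [], "test": []}
    for unit_id, value in metric_by_unit.items():
        groups[assign(unit_id, salt)].append(value)
    if not groups["control"] or not groups["test"]:
        return float("inf")  # a salt that leaves one group empty is unusable
    return abs(mean(groups["control"]) - mean(groups["test"]))


def best_salt(metric_by_unit: dict, n_salts: int = 100) -> str:
    """Try n_salts candidate salts and keep the one with the smallest imbalance."""
    candidates = [f"salt-{i}" for i in range(n_salts)]
    return min(candidates, key=lambda s: imbalance(metric_by_unit, s))


# Hypothetical pre-experiment revenue per company, with two "whales".
revenue = {f"company-{i}": 100.0 for i in range(18)}
revenue["company-whale-1"] = 50_000.0
revenue["company-whale-2"] = 48_000.0

salt = best_salt(revenue)
print(salt, imbalance(revenue, salt))
```

The chosen salt is simply the candidate whose split has the closest group means on the metric you supplied, so it tends to avoid splits where both whales land in the same group.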

Read more about using the feature here, or learn more about how it works here. This is now rolling out on both Statsig Cloud and Warehouse Native on Pro and Enterprise tiers.

Tables as Metric Source

When we created Metric Sources, we supported arbitrary SQL queries to maximize flexibility. We've now added support for directly pointing to tables for when that's what you want.

Using tables directly is simpler and improves performance. We can pick just the columns we need to operate on, even when the table is very wide. Complex filters can be applied efficiently without your SQL engine first trying to materialize a CTE.

An added perk with using Tables as a Metric Source is being able to use formulae. You can apply simple SQL transforms to columns (e.g. convert from cents to dollars by dividing by 100) or alias them to make them more discoverable.
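As a rough illustration of what a column formula amounts to, the sketch below runs the cents-to-dollars transform against an in-memory SQLite table. SQLite stands in for your warehouse here, and the `purchases` table and its column names are hypothetical.

```python
import sqlite3

# In-memory stand-in for a warehouse table (hypothetical schema).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE purchases (user_id TEXT, amount_cents INTEGER, ts TEXT)")
conn.executemany(
    "INSERT INTO purchases VALUES (?, ?, ?)",
    [("u1", 1250, "2024-05-01"), ("u2", 399, "2024-05-02")],
)

# The "formula": divide by 100 and alias the result to a more discoverable name.
# Only the columns that are needed are selected, even if the table were wider.
rows = conn.execute(
    "SELECT user_id, amount_cents / 100.0 AS amount_dollars "
    "FROM purchases ORDER BY user_id"
).fetchall()

for user_id, amount_dollars in rows:
    print(user_id, amount_dollars)
```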

🥷🏼 Statsig ID Resolver

Ever pop into a Feature Gate to check if a user is overridden and get hit with a wall of long, cryptic IDs that mean absolutely nothing to you? Yep, we have too.

Introducing Statsig ID Resolver. Now you can host a mapping of IDs to real names (or any string, really) to make leveraging IDs easier throughout the Console.

To set up Statsig ID Resolver, navigate to “Settings” → “Project Settings” → “Integrations” and scroll all the way to the bottom to find “Statsig ID Resolver”. Check out our documentation for full instructions to get ID Resolver set up.

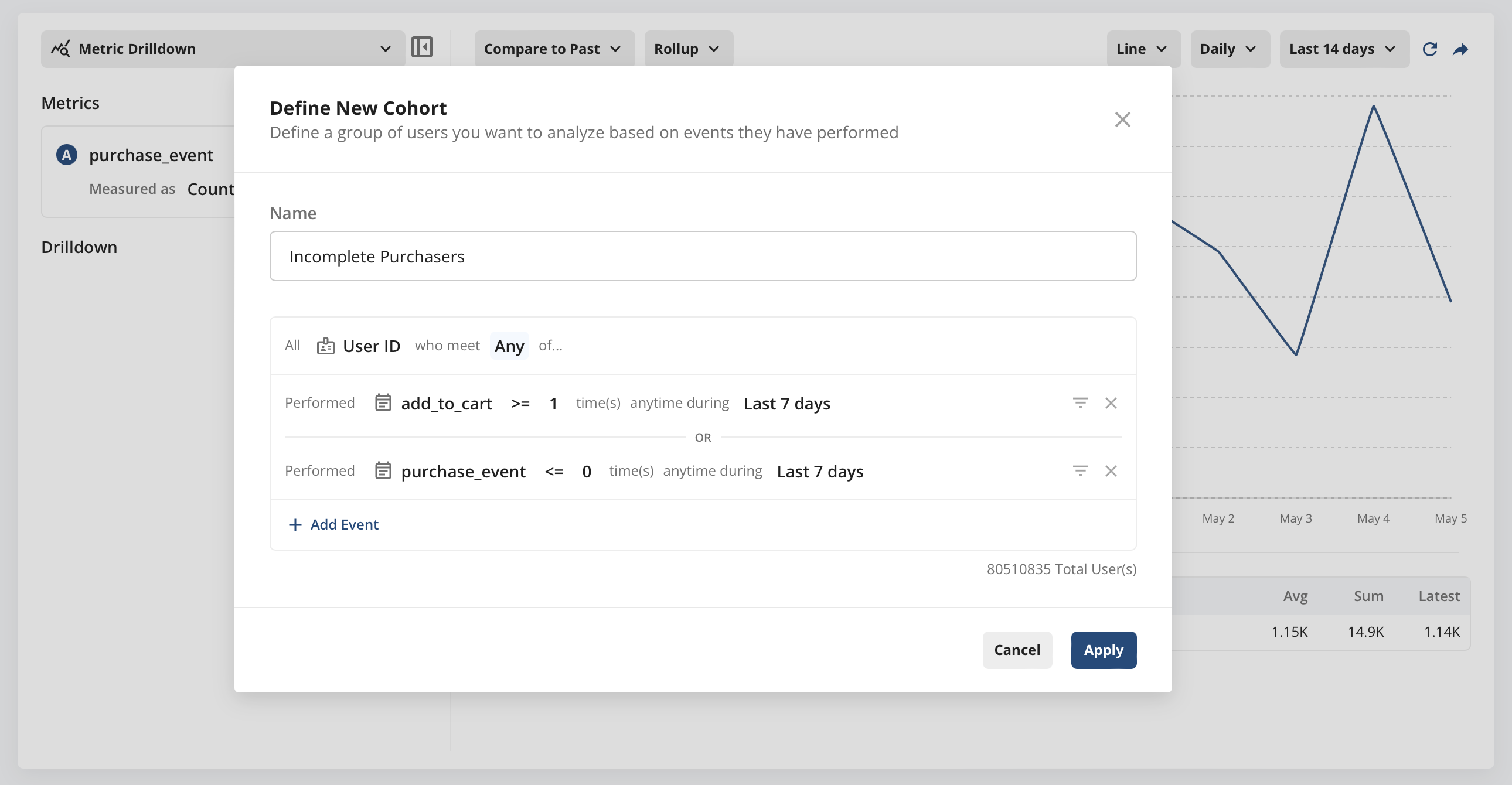

Multi-Event Cohorts

We are excited to introduce a new feature to product analytics in Statsig: Multi-Event Cohorts. This new feature allows you to define user cohorts based on multiple events, enabling the creation of highly specific and insightful user groups.

With Multi-Event Cohorts, you can now include users who have engaged in various combinations of activities, such as users who both completed a purchase and subscribed to a newsletter, or users who viewed a product but did not add it to their cart. This capability provides a powerful tool for dissecting user behaviors and understanding the different ways users interact with your product.
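Conceptually, these cohorts are set operations over the users who performed each event. The sketch below shows the two examples above with plain Python sets; the event names and user IDs are made up, and this is only an illustration of the logic, not how Statsig computes cohorts.

```python
from collections import defaultdict

# Hypothetical event log: (user_id, event_name)
events = [
    ("u1", "purchase"),
    ("u1", "newsletter_subscribe"),
    ("u2", "product_view"),
    ("u2", "add_to_cart"),
    ("u3", "product_view"),
    ("u4", "purchase"),
]

# Index users by the events they performed.
users_by_event = defaultdict(set)
for user_id, event_name in events:
    users_by_event[event_name].add(user_id)

# Users who both completed a purchase and subscribed to the newsletter.
purchased_and_subscribed = (
    users_by_event["purchase"] & users_by_event["newsletter_subscribe"]
)

# Users who viewed a product but did not add it to their cart.
viewed_not_carted = users_by_event["product_view"] - users_by_event["add_to_cart"]

print(purchased_and_subscribed, viewed_not_carted)
```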

By utilizing Multi-Event Cohorts, you can craft more detailed and useful insights into user engagement, helping you to improve strategies for user retention and product development.

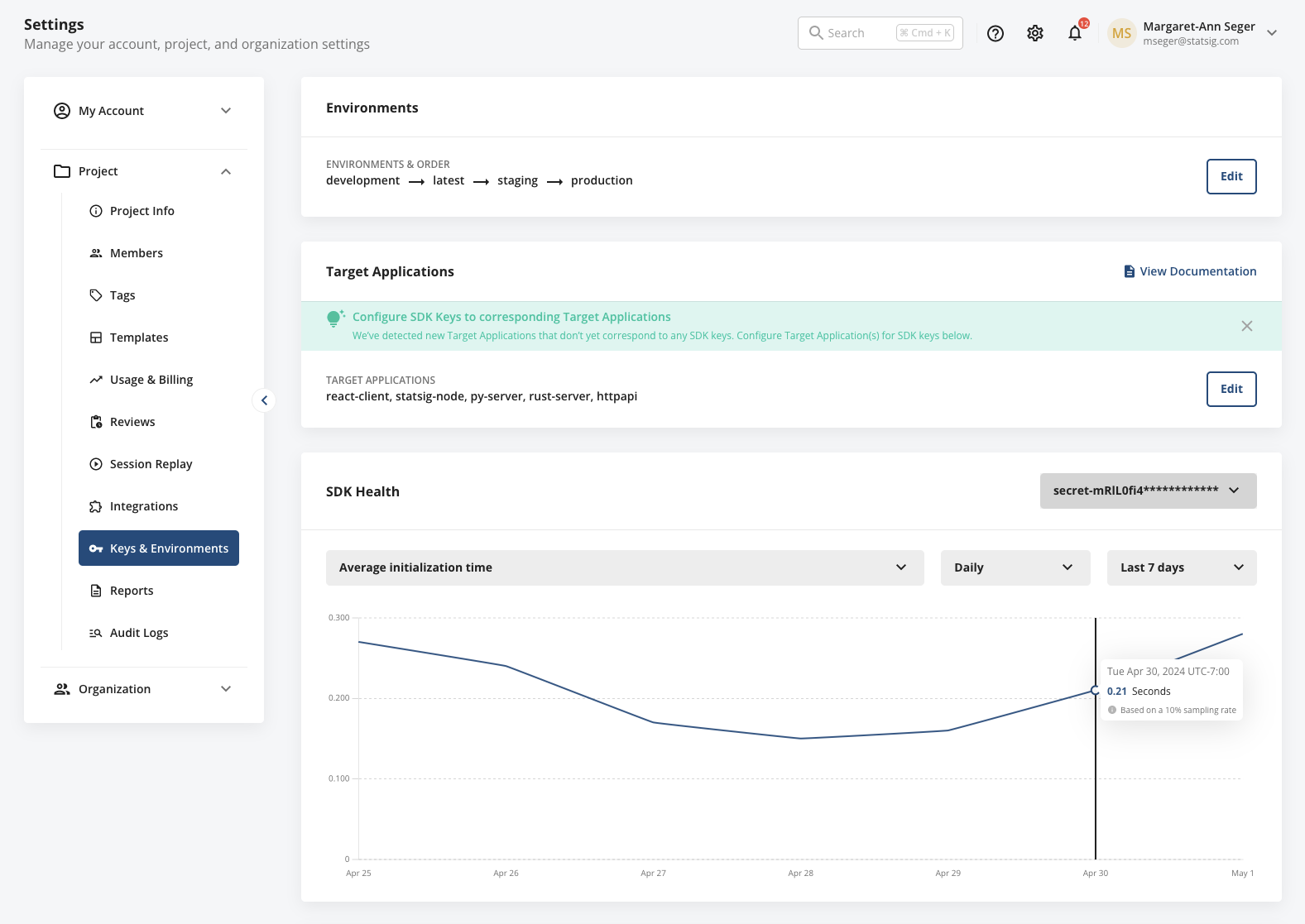

🫀 SDK Health Visibility

While the Statsig Console is the face of most customers’ experience with Statsig, much of the power of Statsig happens “behind the scenes” via Statsig’s SDKs. Today, we’re excited to unveil a set of SDK performance stats to enable customers to stay abreast of their SDK health, including:

Download config spec size

Success rate

Average initialization time

… with more stats coming soon!

These stats are accessible alongside your SDK keys in Settings → Project Settings → Keys & Environments and can be viewed hourly or daily, over any custom date range.

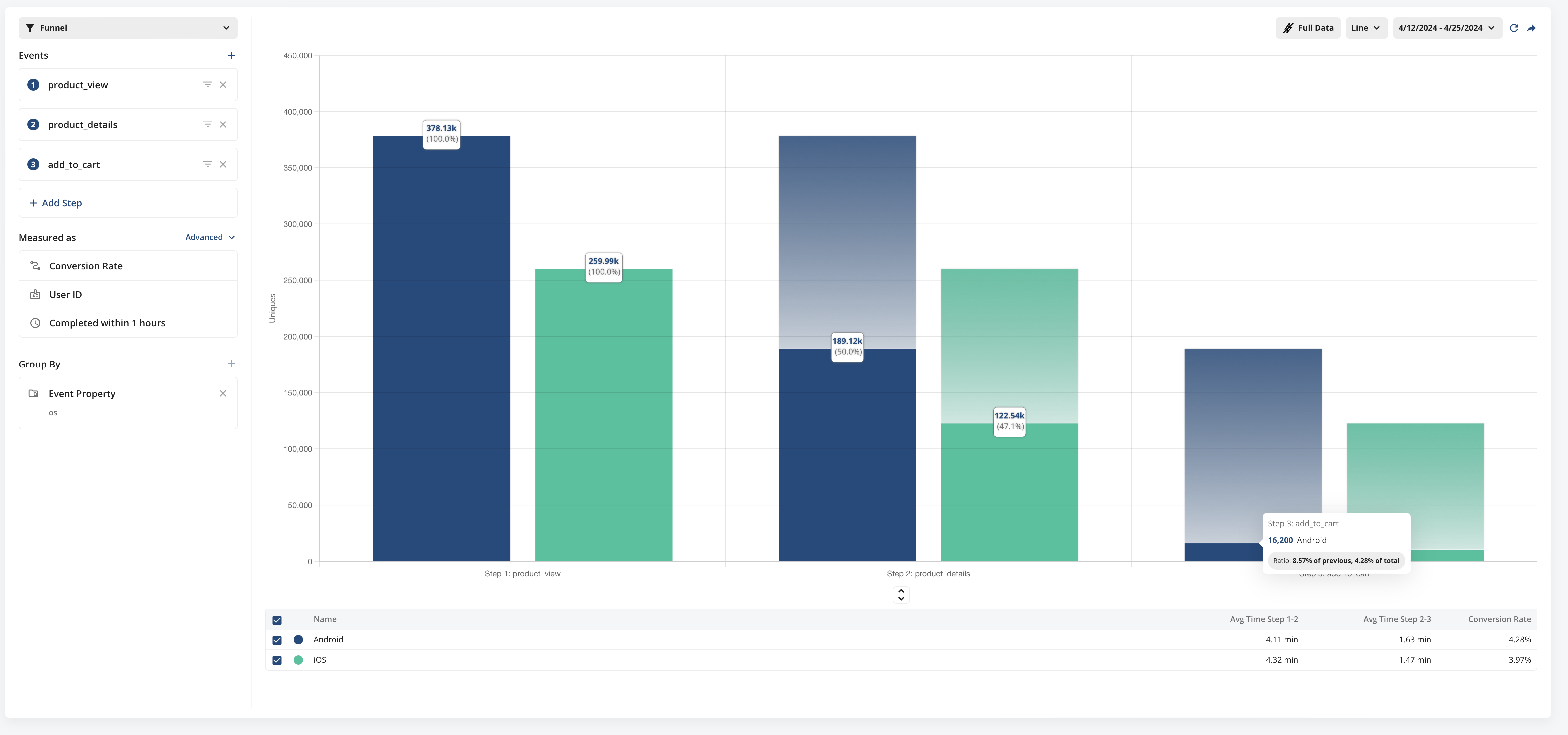

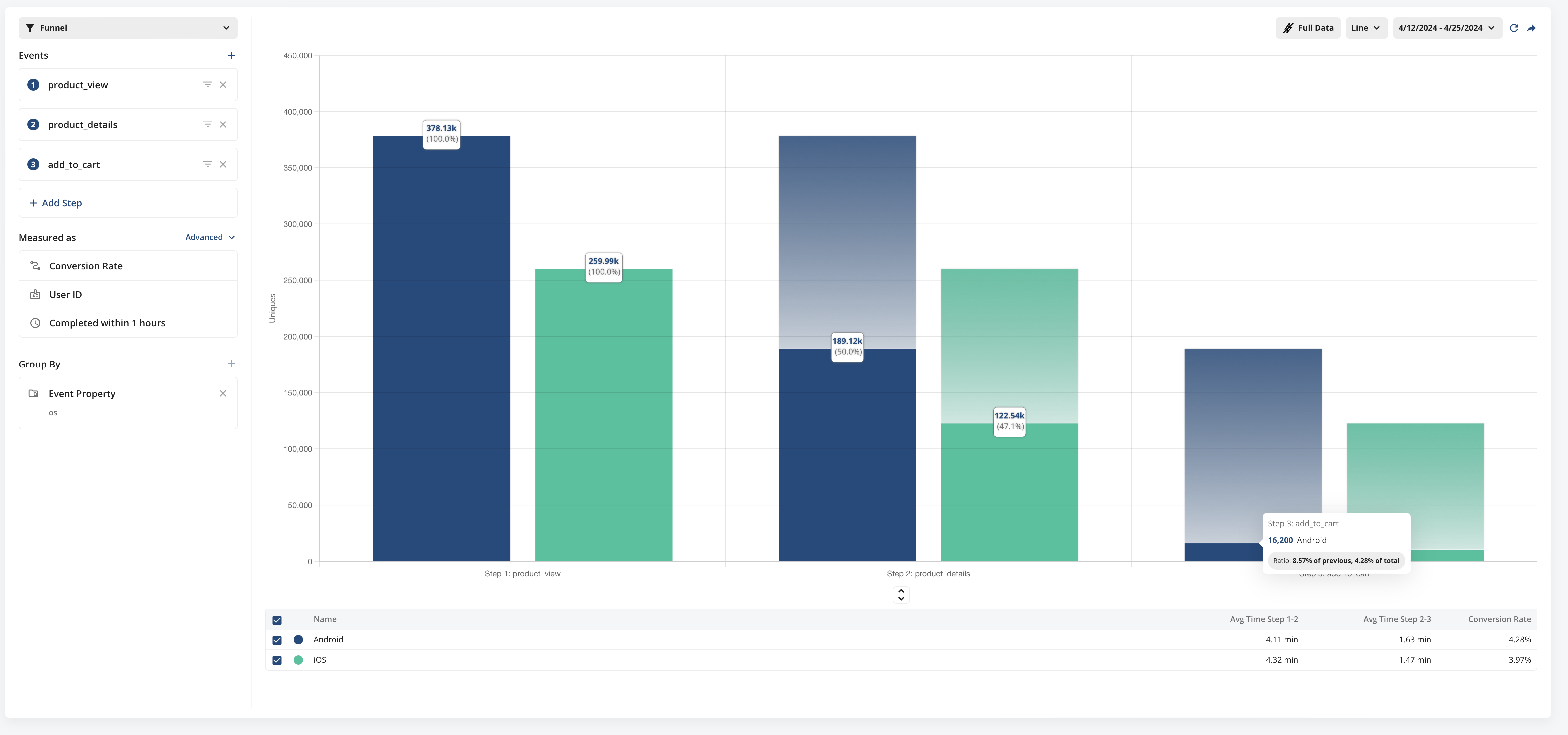

Funnels 2.0

We've recently launched our most significant updates to Funnels in Metrics Explorer to date. Funnels now have more useful configuration options, more information about how people convert through your funnels, two new ways to view conversion information, and a better overall look and feel.

Funnel Views

You can now view funnels in three ways: Conversion Rate, Conversion Rate Over Time, and Time To Convert Distribution.

Conversion Rate

In this classic funnel view, you can visualize what portion of users convert through each step of a funnel you define. This view is useful for understanding exactly where funnel drop-offs are occurring and helps you focus efforts to make targeted improvements.

Conversion Rate Over Time

This view allows you to understand how your funnel performance is trending over time. This view is useful in detecting unexpected changes in funnel conversion, or validating that changes made to improve conversion are having the intended effect.

Time to Convert Distribution

This view allows you to understand the general distribution of time it takes to convert through the funnel. We show the percentage of converting funnels that convert within each of the time ranges on the x-axis. This is useful in understanding how long it generally takes people to convert through the funnel.

New Configuration Options

You now have granular control of the funnel conversion window. Set it anywhere from 1 second to 7 days.

In the advanced menu you can also select if the events in the funnel must be completed sequentially, or if completing the steps in any order counts as a conversion.
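To illustrate how the conversion window and the ordering option interact, here is a small sketch for a single user's events. It assumes the window is measured from the first funnel event in the attempt, and that "sequential" allows unrelated events between steps; both are assumptions for illustration, not a statement of how Statsig evaluates funnels. The function and event names are hypothetical.

```python
def converts(events, steps, window_s, sequential=True):
    """Return True if one user's events complete every funnel step within window_s.

    events: list of (timestamp_seconds, event_name) for a single user.
    steps:  ordered list of funnel step event names.
    """
    events = sorted(events)
    for i, (start, name) in enumerate(events):
        # A sequential attempt must start at step 1; an any-order attempt
        # may start at any event that belongs to the funnel.
        if sequential and name != steps[0]:
            continue
        if not sequential and name not in steps:
            continue
        in_window = [n for t, n in events[i:] if t - start <= window_s]
        if sequential:
            next_step = 0
            for n in in_window:
                if n == steps[next_step]:
                    next_step += 1
                    if next_step == len(steps):
                        return True
        elif set(steps) <= set(in_window):
            return True
    return False


steps = ["view", "add_to_cart", "checkout"]
out_of_order = [(0, "view"), (30, "checkout"), (50, "add_to_cart")]
slow_user = [(0, "view"), (10, "add_to_cart"), (100, "checkout")]

print(converts(out_of_order, steps, 60, sequential=True))
print(converts(out_of_order, steps, 60, sequential=False))
print(converts(slow_user, steps, 60))
print(converts(slow_user, steps, 120))
```

The out-of-order user only counts as converted when any-order matching is enabled, and the slow user only converts once the window is wide enough to cover all three steps.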

Time to Convert Information

In addition to the new Time-To-Convert funnel view, in the default Conversion Rate view, we now show time-to-convert information between each step of the funnel.

Coming Soon

We're planning to add more configuration options very soon, including:

Unique Conversion or Overall Conversion - choose whether to only count the unique individuals that converted through the funnel, or total funnel conversions that occurred

Strict Step Ordering - Require events in the exact order defined (with no events between)

Exclusion Events - Exclude funnels that contain specific events between steps

Hold Property Constant - Only count funnels where a given property remains constant through each step.

Integration with Session Replays - View users who didn't convert and see replays that help you gain contextual understanding for why they didn't convert.

Experiment Assignment (Exposure) Filtering on WHN

For some experiments, assignment and exposure are separate events. For example, you may need to generate a web page that has an experiment at the bottom of the page; users are exposed only if they scroll down and see the experiment.

If you are using an assignment tool that logs an exposure event before users are actually exposed to the experiment, you need to filter down the list of users in the assignment source to people who actually saw the experiment.

You can filter the assignment source data based on a Qualifying Event, so that assignments only include subjects that have generated (or have not generated) this qualifying event.

You can find these settings in the Advanced options of the Setup page when creating an Analyze-only Experiment.
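The effect of a Qualifying Event filter can be sketched in a few lines. The record layout, the `filter_assignments` helper, and the `saw_experiment_module` event are hypothetical and only meant to show the include/exclude logic.

```python
# Hypothetical assignment source rows.
assignments = [
    {"user_id": "u1", "group": "test"},
    {"user_id": "u2", "group": "control"},
    {"user_id": "u3", "group": "test"},
    {"user_id": "u4", "group": "control"},
]

# Users who generated the hypothetical qualifying event "saw_experiment_module".
qualifying_users = {"u1", "u4"}


def filter_assignments(assignments, qualifying_users, include=True):
    """Keep subjects that did (include=True) or did not (include=False)
    generate the qualifying event."""
    return [a for a in assignments if (a["user_id"] in qualifying_users) == include]


exposed = filter_assignments(assignments, qualifying_users, include=True)
not_exposed = filter_assignments(assignments, qualifying_users, include=False)

print([a["user_id"] for a in exposed])
print([a["user_id"] for a in not_exposed])
```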

Using Statsig SDKs? You don't need to filter!

When using Statsig SDKs you can use getExperimentWithExposureLoggingDisabled on assignment and manuallyLogExperimentExposure at the point of exposure to accurately capture exposure. You don't need to "filter" or clean these exposures.

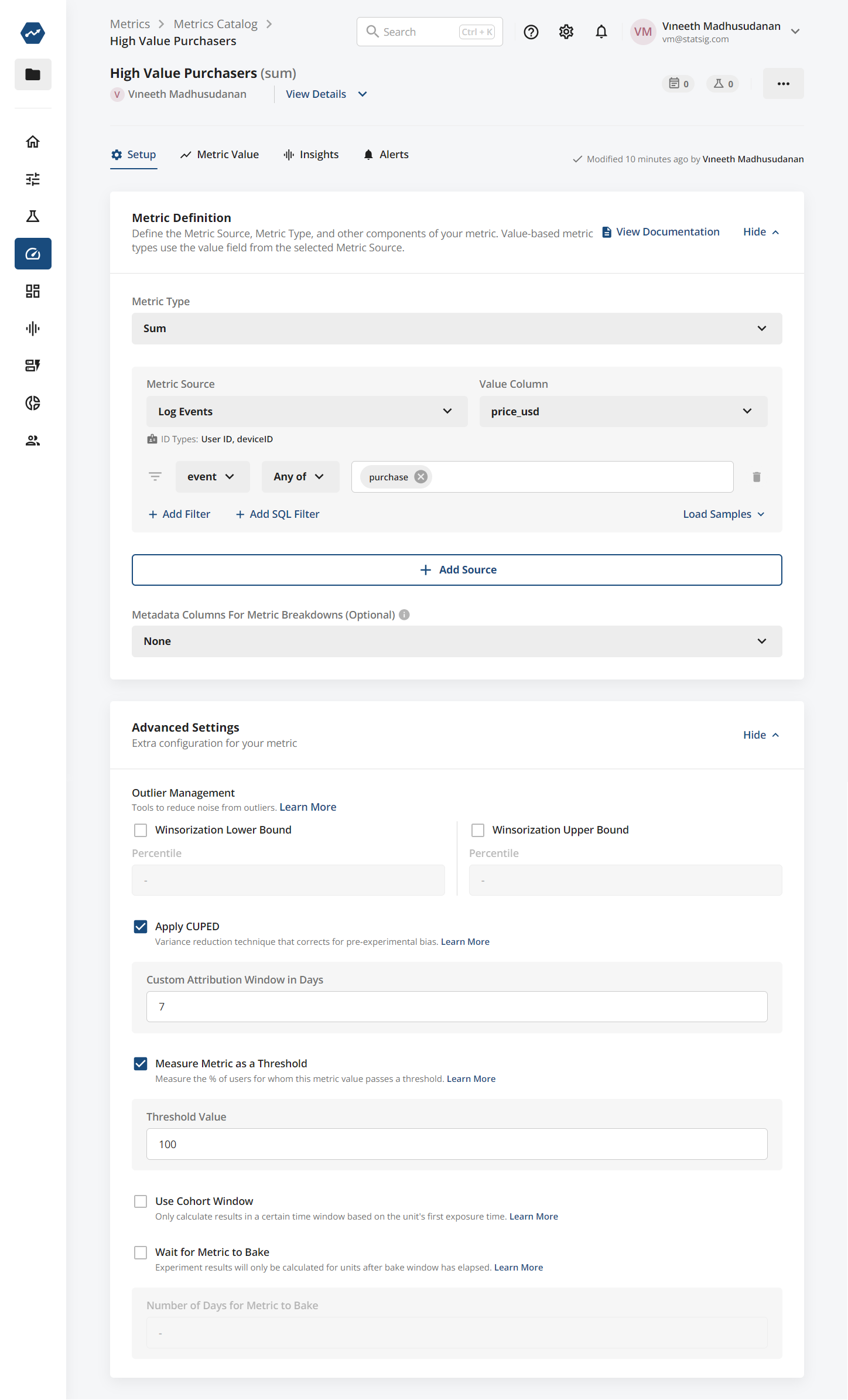

Threshold Metrics on WHN

Sum and count metrics can now be configured to use a threshold. When using a threshold, the metric will measure if the user's sum or count metric surpassed a given threshold. This is usually combined with cohort windows to create a metric like "% of users who spent more than $100 in their first week".
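As a worked example of the "% of users who spent more than $100 in their first week" metric, the sketch below sums each user's purchases inside a 7-day cohort window and checks the total against the threshold. The data, the function name, and the choice of a strict "greater than" comparison are assumptions for illustration.

```python
from datetime import datetime, timedelta

# Hypothetical cohort start (first seen) per user.
first_seen = {
    "u1": datetime(2024, 1, 1),
    "u2": datetime(2024, 1, 1),
    "u3": datetime(2024, 1, 2),
    "u4": datetime(2024, 1, 3),
}

# Hypothetical purchases: (user_id, timestamp, amount_in_dollars)
purchases = [
    ("u1", datetime(2024, 1, 2), 60.0),
    ("u1", datetime(2024, 1, 5), 55.0),   # u1 totals 115 in week one
    ("u2", datetime(2024, 1, 3), 40.0),
    ("u2", datetime(2024, 1, 20), 500.0), # outside u2's first week, ignored
    ("u4", datetime(2024, 1, 4), 100.0),  # exactly 100 does not surpass 100
]


def share_over_threshold(first_seen, purchases, threshold=100.0,
                         window=timedelta(days=7)):
    """Fraction of users whose summed spend inside the cohort window
    exceeds the threshold."""
    totals = {user: 0.0 for user in first_seen}
    for user, ts, amount in purchases:
        start = first_seen[user]
        if start <= ts < start + window:
            totals[user] += amount
    passed = sum(1 for total in totals.values() if total > threshold)
    return passed / len(totals)


print(share_over_threshold(first_seen, purchases))
```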

This is currently available on Statsig Warehouse Native. Learn more.
