Written with Bella Muno (PM @ Tavour)

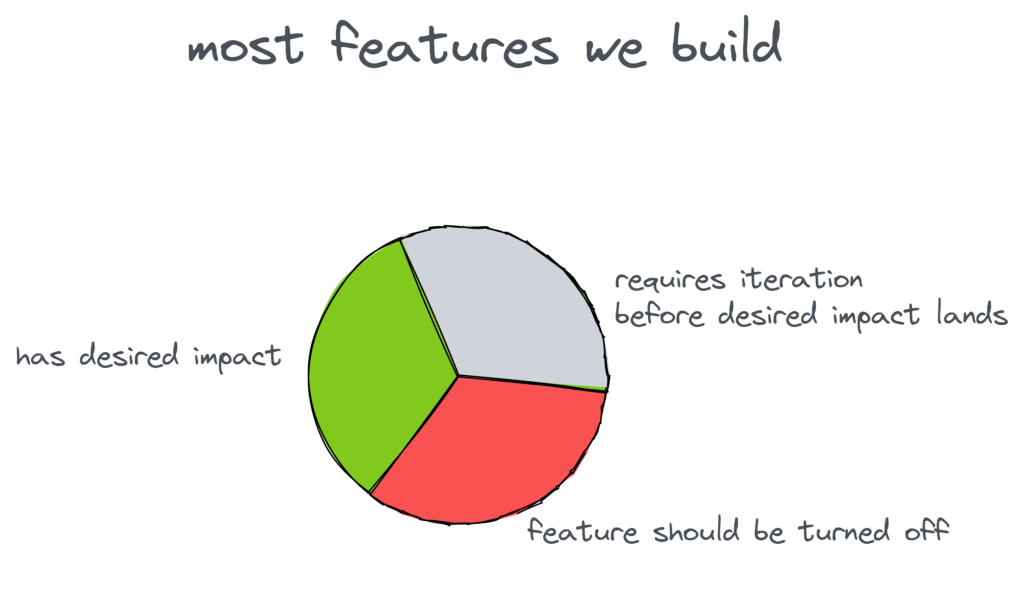

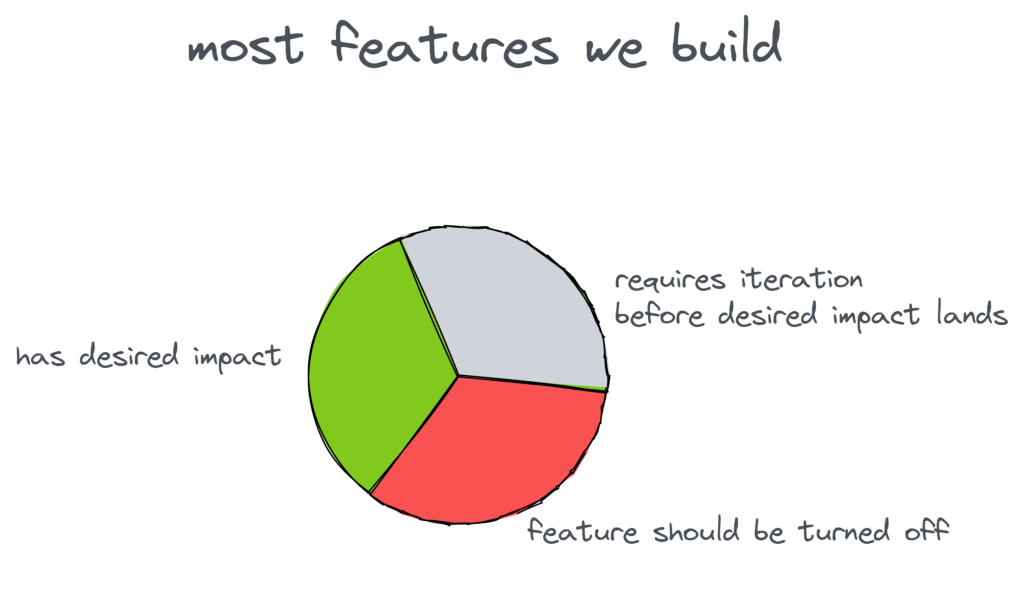

Every feature is well-intentioned, but…

Every feature is well-intentioned; that’s why we build them. In our experience, however, fewer than a third of features create a positive impact. Another third require iteration before they land the desired impact, because users might not discover them or are confused by them. The final third are bad for users: they have a negative effect on product metrics, and the best bet is to unship them.

This split varies with both product maturity (it’s harder to find wins on a well-optimized product) and the product team’s insight and creativity. Yet these three buckets almost always exist, and without critically analyzing each feature’s impact, a team can’t know which bucket the feature falls into.

How can a team tell which bucket a feature is in?

Automatic A/B Tests

Statsig turns every feature rollout into an A/B test with no additional work. In a partial rollout, people who aren’t yet getting the new feature form the Control group of the A/B test, and people who are getting it form the Test group. By comparing metrics you’re already logging across these two groups, Statsig can tell whether your feature rollout is impacting KPIs, and by how much. Statistical tests identify differences between the groups that are unlikely to be due to randomness and noise.
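
To make the Control-versus-Test comparison concrete, here is a minimal sketch of one common statistical test for a rate metric such as sign-up or activation: a pooled two-proportion z-test. This is an illustration under stated assumptions, not Statsig’s actual methodology (which this post doesn’t describe); the function name `two_proportion_z_test` and the example counts are hypothetical.

```python
import math


def two_proportion_z_test(
    control_successes: int,
    control_total: int,
    test_successes: int,
    test_total: int,
) -> tuple[float, float, float]:
    """Compare a rate metric (e.g. activation) between Control and Test.

    Hypothetical helper for illustration; returns
    (absolute lift, z statistic, two-sided p-value).
    """
    p_control = control_successes / control_total
    p_test = test_successes / test_total
    # Pooled rate under the null hypothesis that the feature has no effect.
    pooled = (control_successes + test_successes) / (control_total + test_total)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / control_total + 1 / test_total))
    if std_err == 0:
        # All users converted, or none did: there is no variance to test against.
        raise ValueError("metric has zero variance across both groups")
    z = (p_test - p_control) / std_err
    # Two-sided p-value: 2 * (1 - Phi(|z|)) simplifies to erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_test - p_control, z, p_value


# Hypothetical counts: 20% of Control and 22% of Test users activated.
lift, z, p_value = two_proportion_z_test(1000, 5000, 1100, 5000)
print(f"lift={lift:.3f} z={z:.2f} p={p_value:.4f}")
```

With these made-up counts the two-point lift yields a p-value below 0.05, so it would be flagged as unlikely to be noise; the same two-point gap on much smaller groups would not be.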

This type of testing enables product teams to understand the impact of features and determine which of the three buckets above a feature is likely to be in!

Simple feature flagging systems let you turn features on and off and control gradual rollouts, but they don’t offer automatic A/B tests or this kind of analysis. Simple experimentation systems let you do something similar, but they introduce too much overhead to be practical on every feature rollout. Hence, Statsig :)

But you mentioned beer…

Tavour is an app that helps fill your fridge with unique craft beers you can’t find locally. They use Statsig to manage features and run experiments.

Address Auto-Complete

One problem the product team at Tavour wanted to solve was friction in the user onboarding process. This friction prompted the team to build an “Address Auto-Complete” feature. They expected it to make the sign-up flow faster and more accurate, resulting in more users signing up.

They put this behind a Statsig feature gate and rolled it out to a small percentage of users to make sure nothing was broken. What they saw next surprised them!
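
Rolling out to a small percentage of users requires a stable way to decide who is in the Test group. A common technique is deterministic hash-based bucketing, sketched below. This is a generic illustration, not Statsig’s internal algorithm or SDK API; the function `in_rollout` and the gate name `address_autocomplete` are hypothetical.

```python
import hashlib


def in_rollout(user_id: str, gate_name: str, rollout_percent: float) -> bool:
    """Hypothetical gate check: deterministically bucket a user into a rollout.

    The same user always gets the same answer for the same gate, so they
    stay in the same group (Test or Control) for the whole experiment.
    """
    # Salt the hash with the gate name so different gates bucket users independently.
    digest = hashlib.sha256(f"{gate_name}:{user_id}".encode("utf-8")).digest()
    # Map the first 8 bytes of the hash onto 10,000 buckets (0.01% granularity).
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    # Users whose bucket falls below the threshold see the feature (Test group);
    # everyone else is the Control group.
    return bucket < rollout_percent * 100


# Example: a hypothetical 5% rollout of the address auto-complete feature.
users = [f"user-{i}" for i in range(10_000)]
test_group = [u for u in users if in_rollout(u, "address_autocomplete", 5)]
print(f"{len(test_group)} of {len(users)} users are in the Test group")
```

Because the threshold only grows as the rollout percentage increases, raising the rollout from 5% to 10% keeps everyone already in the Test group and only adds new users.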

The Problem

Tavour was expecting “Address Auto-Complete” to increase the proportion of successfully activated users. Instead, they found that users exposed to this feature churned at a higher rate than users in the control group, who didn’t see it.

Initial suspicion fell on data quality. Could logging bugs or bad data pipelines be causing this? After vetting these and finding no issues, the team looked at other metrics impacted by the rollout. A system event, “Application Backgrounded”, had also shot up. A new feature causing users to abandon the app suggested something unusual was going on.

The Insight

The Tavour team started investigating the usability of the new feature. Looking at other apps, they noticed that those apps displayed more address suggestions without scrolling than the Tavour app did. They formed a hypothesis: the partial list of autocomplete suggestions in the Tavour app did not convey to users that there were additional suggestions. When they sliced the results by phone size, they saw a marked difference between small and large phones. On a small phone, fewer address suggestions were visible without scrolling; on a large phone, more were visible. This finding supported the hypothesis that showing fewer addresses confused users and prompted them to abandon registration.
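
Slicing experiment results by a user attribute amounts to computing the metric separately for each (segment, group) pair. The sketch below assumes a per-user record with hypothetical fields `group`, `phone_size`, and `churned`; Tavour’s actual data model and tooling aren’t described in this post, and the sample records are made up.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class SignupRecord:
    # Hypothetical per-user record; field names are illustrative only.
    group: str        # "control" or "test"
    phone_size: str   # e.g. "small" or "large"
    churned: bool


def churn_rate_by_segment(records: list[SignupRecord]) -> dict[tuple[str, str], float]:
    """Return the churn rate keyed by (phone_size, group)."""
    # Each entry holds [churned_count, total_count] for one segment/group pair.
    counts = defaultdict(lambda: [0, 0])
    for r in records:
        key = (r.phone_size, r.group)
        counts[key][0] += int(r.churned)
        counts[key][1] += 1
    return {key: churned / total for key, (churned, total) in counts.items()}


# Made-up records, purely to show the shape of the output.
records = [
    SignupRecord("control", "small", False),
    SignupRecord("control", "small", True),
    SignupRecord("test", "small", True),
    SignupRecord("test", "small", True),
    SignupRecord("test", "large", False),
    SignupRecord("test", "large", True),
]
for (phone_size, group), rate in churn_rate_by_segment(records).items():
    print(f"{phone_size:>5} / {group:<7} churn rate: {rate:.0%}")
```

A gap between Test and Control that is much larger on small phones than on large ones is the pattern described above; in practice each segment’s difference would also need a significance check, since slicing shrinks the sample in every segment.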

The Result

Tavour decided to tweak the feature so users could see more auto-complete suggestions without having to scroll.

“New user activation rate increased by double-digit percentage points”

The revised feature increased the new user activation rate, giving the team the confidence to finish rolling it out!