Build like the best AI product teams

Companies like OpenAI and Notion are following a 3-layer framework to validate and ship AI products with confidence.

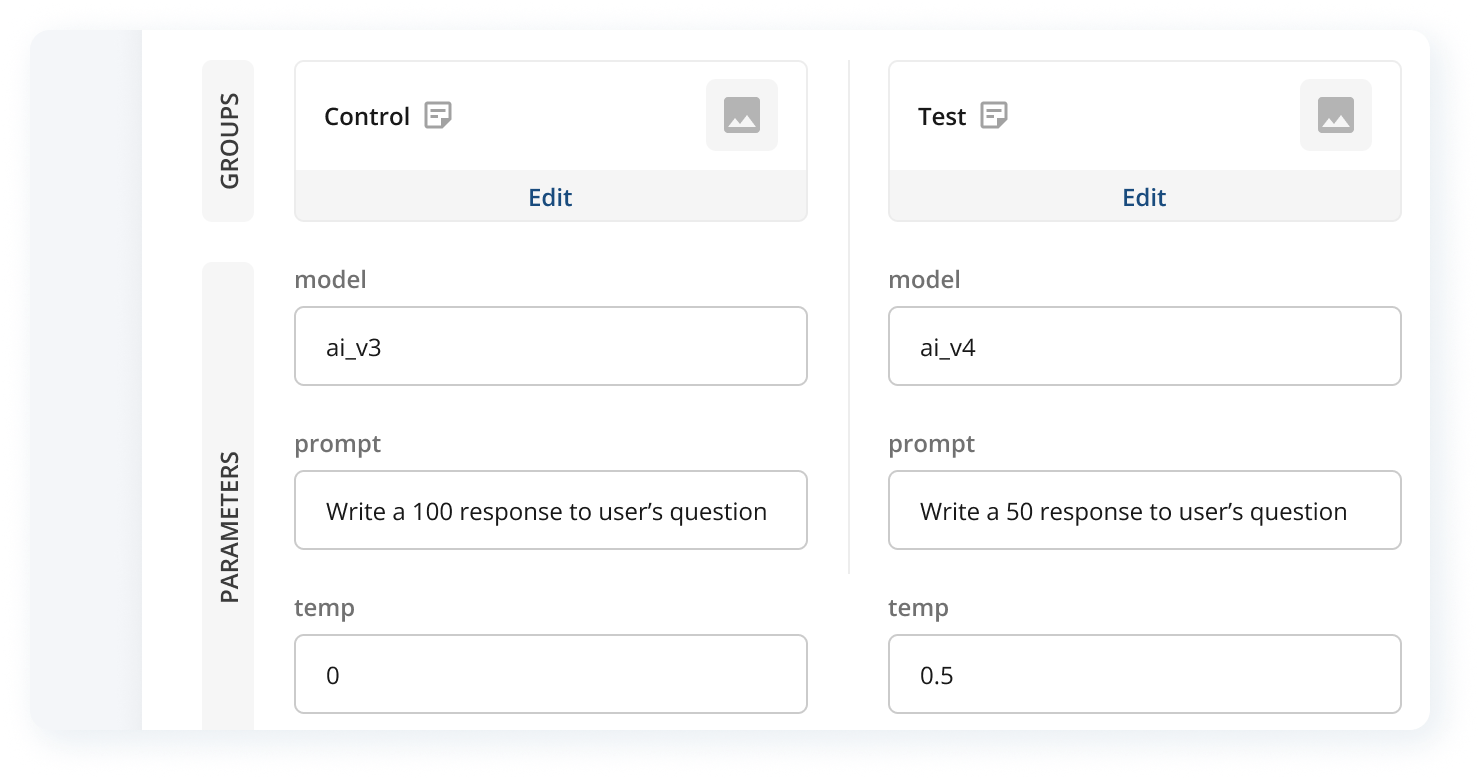

Build a differentiated AI product

The difference between good and great comes from systematic experimentation. Compare any model or input head-to-head to improve the usefulness of your AI application.

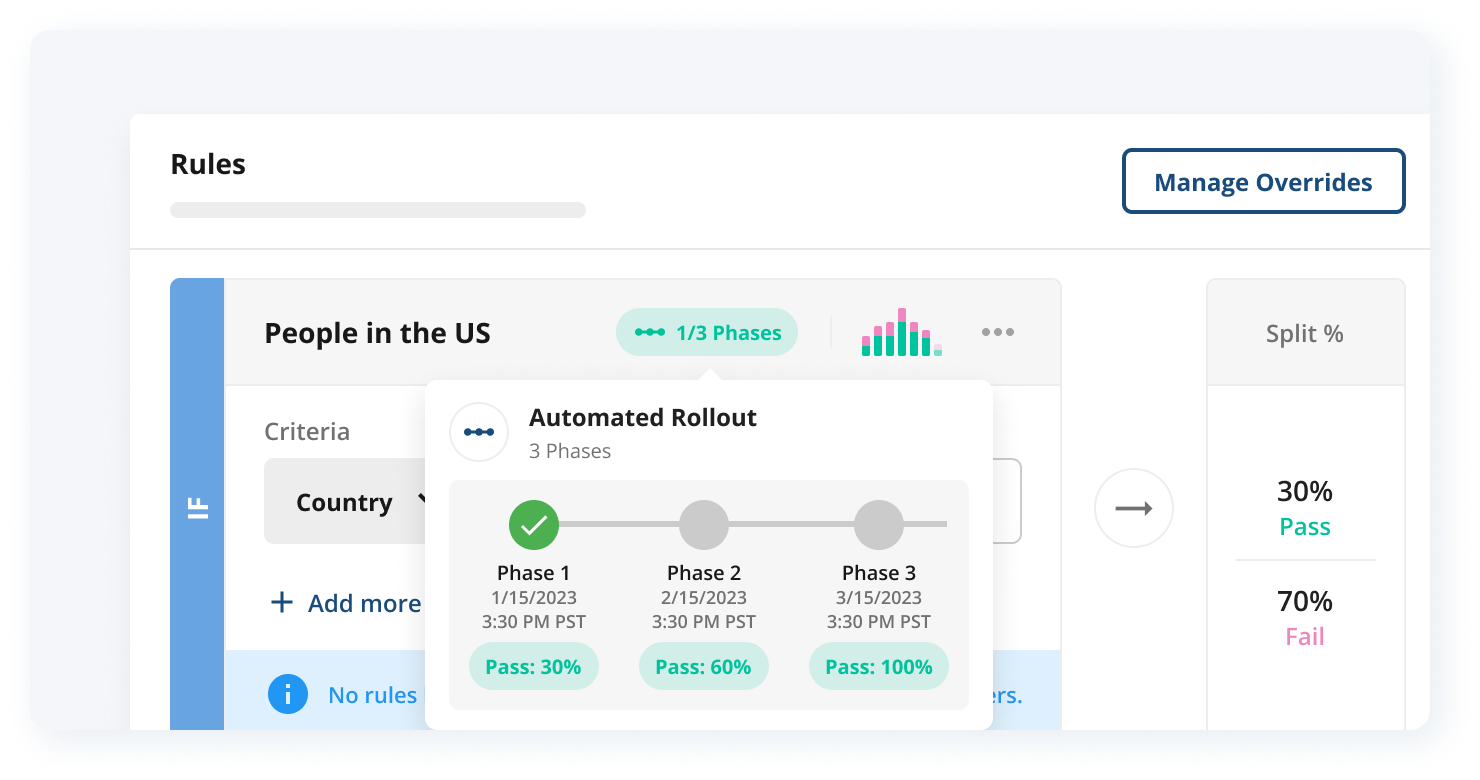

Continuously roll out and optimize models

Instantly deploy new models, test combinations of models and parameters, and measure core user, performance, and business metrics.

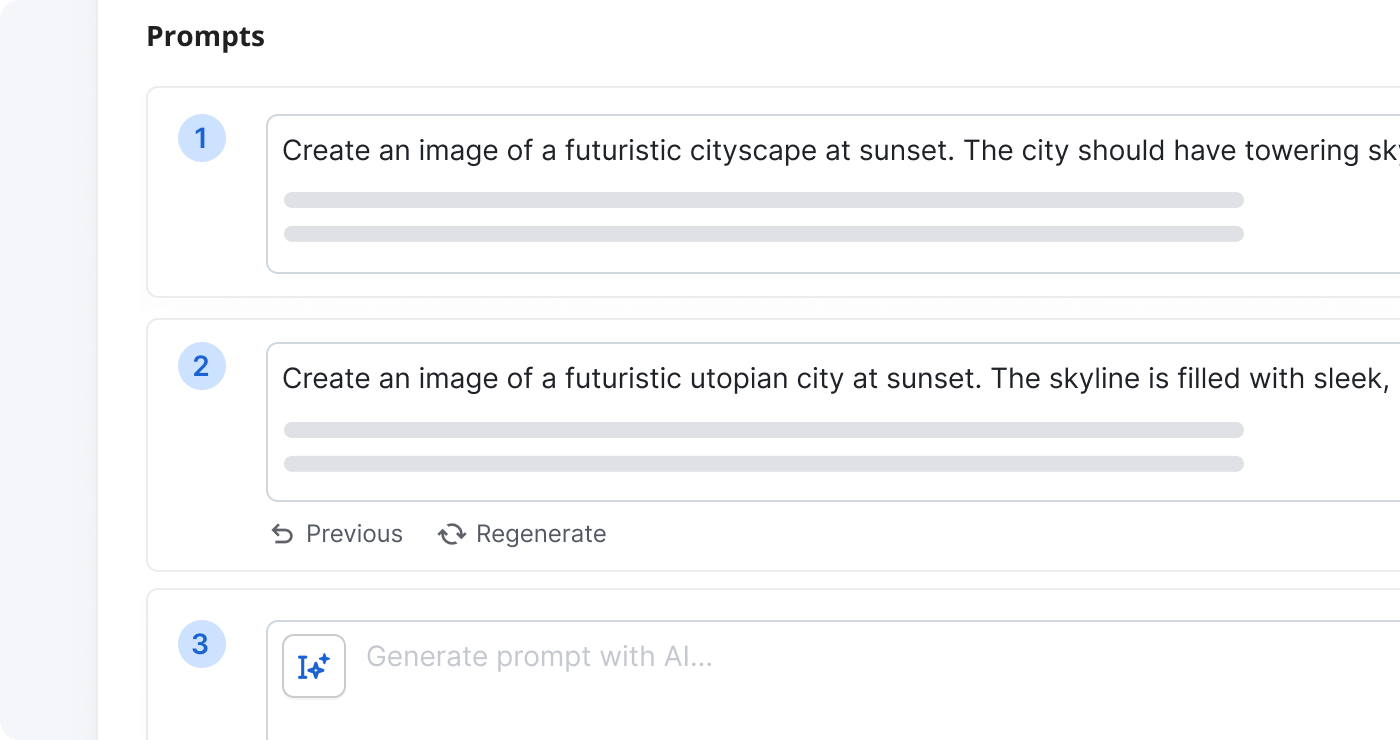

Hybrid offline and online evaluations

Benchmark your prompts offline using your evaluation datasets, then ship to production as an A/B test. By linking evals and real-world testing, your team can measure real impact.
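
As a rough illustration of that offline-then-online flow, here is a minimal Python sketch. It is not any vendor's API: `EVAL_SET`, `offline_score`, `assign_variant`, and the two stand-in prompt functions are hypothetical names invented for this example, and the scoring rule (a substring match against the expected answer) is a simplifying assumption.

```python
import hashlib
from statistics import mean

# Hypothetical evaluation dataset: (input, expected answer) pairs.
EVAL_SET = [("2+2", "4"), ("capital of France", "Paris"), ("3*3", "9")]


def offline_score(generate, dataset):
    """Return the fraction of examples whose output contains the expected answer."""
    return mean(
        1.0 if expected in generate(question) else 0.0
        for question, expected in dataset
    )


def assign_variant(user_id, experiment, variants=("control", "treatment")):
    """Deterministically bucket a user by hashing the experiment name and user ID."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return variants[int(digest, 16) % len(variants)]


# Stand-ins for two prompt versions; a real setup would call a model here.
def prompt_a(question):
    return {"2+2": "4", "capital of France": "Paris"}.get(question, "unknown")


def prompt_b(question):
    return {"2+2": "4", "capital of France": "Paris", "3*3": "9"}.get(question, "unknown")


# Step 1: benchmark both prompt versions offline.
scores = {"prompt_a": offline_score(prompt_a, EVAL_SET),
          "prompt_b": offline_score(prompt_b, EVAL_SET)}

# Step 2: serve the offline winner only to the treatment group in production.
def handle_request(user_id, question):
    variant = assign_variant(user_id, "prompt_b_rollout")
    generate = prompt_b if variant == "treatment" else prompt_a
    return variant, generate(question)
```

Hashing the user ID keeps each user in the same group across requests, so production metrics can be compared per variant and checked against the offline scores.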