Whatnot goes from 0 to 400 annual experiments


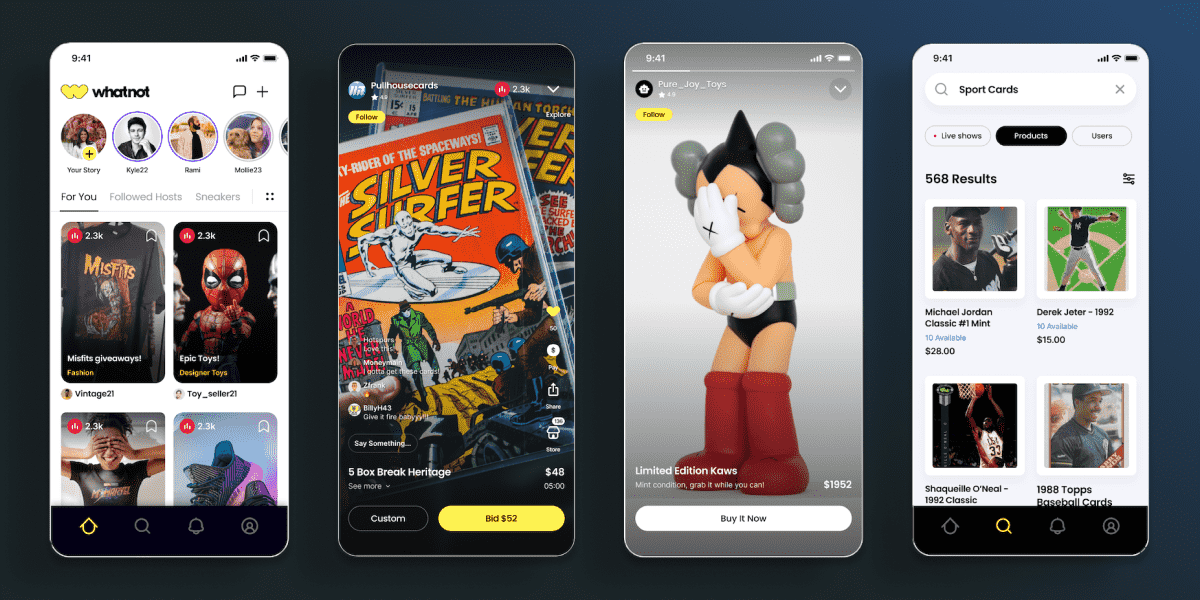

Whatnot is the largest livestream shopping platform in the United States and Europe, where people buy and sell a wide range of products. As a two-sided marketplace, its business model is complex on both the buyer and seller sides. Building a data-driven culture with Statsig has enabled Whatnot to move fast, innovate confidently, and scale its platform.

Year 1: Accelerating release cycles by testing in production


The problem at hand

“Testing new features in production was incredibly difficult & time-consuming.” That’s how Rami Khalaf, Product Engineering Manager at Whatnot, described rolling out new features at the organization. His engineering team was bogged down with managing divergent release cycles for each app platform, rolling out new builds, and waiting for users to upgrade before learning how the new releases were performing.

“Not only did slow release cycles get in the way of delivering value to users, they also took invaluable time from our small, single-digit engineering team,” Khalaf explained.

According to Khalaf, the bottleneck stemmed primarily from the real-time, ephemeral nature of Whatnot’s livestreams. Sellers start streaming whenever they want, list items for sale, and can stop streaming at any time. As a result, testing new features that relied on live interaction was difficult and time-consuming.

Khalaf needed a solution that enabled his team to quickly and confidently test the impact of new releases, in a real production environment, before rolling out to their entire population of users.

The Solution

Internal dogfooding with Statsig’s feature flags

To solve these challenges and speed up release cycles, Khalaf created an “Internal Dogfooding” framework at Whatnot. Using Statsig Feature Flags, his team can now quickly ship every rollout to a real production environment, visible only to internal Whatnot employees, to see how it lands.

Everyone on the Whatnot team dogfoods each new release to quickly identify major issues and gather initial feedback. By using Statsig to gate new features to internal employees under a real production workload, Khalaf and his team can confidently test and polish features before they reach real users.

Canary releases with Statsig feature flags

Internal dogfooding is one thing, but to be fully confident in new rollouts, Khalaf also needs feedback from real users. To get it safely, his team uses Statsig to enable new features for a very small subset of public users, say 5% of the total population (their “canaries”).
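To make the dogfooding and canary steps concrete, here is a minimal sketch of how a percentage-based gate can work in principle. It is not Statsig's SDK or its actual bucketing algorithm: the function names, the hypothetical `example.com` email domain used to recognize employees, the SHA-256 bucketing, and the `new_checkout` feature name are all illustrative assumptions. Employees always pass, while each public user is hashed deterministically into the range [0, 100), so the same user keeps getting the same answer and roughly 5% of public users pass.

```python
import hashlib

# Hypothetical settings for illustration only; not Statsig configuration.
INTERNAL_EMAIL_DOMAIN = "example.com"  # assumption: employees identified by email domain
CANARY_PERCENT = 5.0                   # share of public users who get the feature


def bucket(user_id: str, salt: str) -> float:
    """Deterministically map a user to a number in [0, 100)."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    # Take the first 32 bits of the hash and scale them into [0, 100).
    return int(digest[:8], 16) / 2**32 * 100


def gate_passes(user_id: str, email: str, feature: str,
                percent: float = CANARY_PERCENT) -> bool:
    """Employees always pass (dogfooding); public users pass if they fall in the canary slice."""
    if email.lower().endswith("@" + INTERNAL_EMAIL_DOMAIN):
        return True
    return bucket(user_id, feature) < percent


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(100_000)]
    exposed = sum(gate_passes(u, f"{u}@gmail.com", "new_checkout") for u in users)
    print(f"canary share: {exposed / len(users):.2%}")  # close to 5%
```

In this sketch, rolling back means setting `percent` to 0 (employees still see the feature), and widening the rollout means raising it. Because each user's bucket is stable, users already in the canary stay in it as the percentage grows.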

Using Statsig Metrics, Whatnot engineers can immediately see how a new release affects their key business metrics, shortening the cycle from building → learning → iterating.


With Statsig Feature Flags, Khalaf and his team can quickly uncover issues, roll back a feature with ease, and deploy a fix within hours. Whatnot repeats this process for each new release until the team is confident the feature is ready for all public users.

Year 2: Going from 0 to 250+ experiments in 3 quarters

A SEV that forced a pivot from feature flagging to experimentation

Todd Rudak, Director of Product Data Science & Analytics, recalled a pivotal moment a year into the company’s partnership with Statsig. At that time, Whatnot extensively used feature flags. With their engineering team still small, they felt confident they could quickly detect any regressions, identify the problematic feature, and roll it back. “We thought we didn’t need to experiment much because we were small, nimble, and agile,” he explained.

However, by early April, as the company grew, they encountered a SEV (a high-severity incident) that changed their perspective. Todd shared, “We were rolling out a series of new features, some of which were major changes.” Among these was the relaunch of the asynchronous marketplace to complement livestreaming, along with changes to homepage recommendation algorithms and UI components. “That week, we introduced three major changes and a myriad of smaller updates, and lo and behold, we faced a SEV!”

Todd outlined the challenges: “We attempted to pinpoint which feature changes were causing the SEV by dissecting every dashboard in the following weeks. We faced numerous red herrings and difficulty in pinpointing the issues with confidence, leading to rolling back and retesting individual features.” This disrupted Whatnot’s development cycle as they tried to determine if a change had inadvertently cannibalized other features. The episode prompted a significant shift in company culture, leading them to prioritize building an experimentation program.

Kickstarting the experimentation journey

Whatnot had some tailwinds that helped them quickly adopt a culture of experimentation. They had recently launched a standardized event bus that emitted events with better data quality and piped them into Statsig.

“We went from a baseline of core metrics to knowing hundreds of different events and changes to user behavior, allowing us to determine if something was a positive or negative change to the user experience.”

Their first couple of months were all about developing the principles for scaling experimentation and defining what the culture would look like. “We didn’t want to be a company that was just chasing the movement of metrics, but rather using metrics as a strong proxy for the user experience and a learning tool. What behaviors do we want to encourage and discourage? We use Statsig as a way to catch regressions and learn throughout the process.”

Whatnot used Statsig to define their primary metrics and also monitor secondary events that they used to observe user behavior across the ecosystem and shape their narrative. “Hey, users are doing more of this and less of something else. Does this make the experience better for buyers and sellers?”

Building on the gains and developing the experimentation mindset

“The fact that we were already using feature flags and had fast release cycles made it easier to adopt experimentation,” noted Todd.

However, not everyone was experienced with large-scale experimentation, so they created onboarding programs for new hires to learn about their tech stack and introduced experimentation as an integral part of onboarding. “The goal was to be a self-serve data company,” explained Todd. The aha moment came when engineers realized the value of being able to isolate the impact of their features and measure that impact.

Todd Rudak

“Thanks to Statsig, the focus for the team was on delivering impact and making the product better for users.”

Scaling up: Going from 0 to 250+ experiments in three quarters drives learnings and core metrics

Over the next three quarters, Whatnot conducted 250+ experiments, spanning various teams from Onboarding Growth to Search & Discovery, each with differing sample sizes and data availability. “We developed a learning mindset focused on understanding changes in user behavior,” Todd explains. Below is a selection of their notable use cases and wins along the way:

1. Refreshing recommendations to boost gross merchandise value (GMV)

“Live selling content can be ephemeral,” noted Todd. When a seller ends a stream, viewers fall back to the homepage, where they had been seeing impressions for livestreams that had already ended, which reduced purchases.

“Through experimentation, we determined how often to refresh the content on the homepage to ensure users were consistently receiving fresh content. This strategy led to a significant increase in GMV,” explained Todd.

2. Improving new user activation

Todd described the key activation flows for their new users and how experimenting with Statsig helped these users become deeply engaged with the platform. “Even though we are a live streaming service, sometimes users still prefer to search and find a product on their own.”

Initially, Whatnot’s offline analysis led the team to funnel users toward livestreams rather than search. However, they soon realized that search mattered more than they had initially thought.

“We were overlooking the importance of the null state of the search page until we started to understand the patterns of when and how people search,” Todd explained. He noted that the first purchase is a critical driver of stickiness in e-commerce. “We could pre-populate the search results based on the Google Ad they clicked or direct them to the seller’s homepage if it was a referral. By experimenting with different ways to pre-populate the search results using Statsig, we were able to connect the dots for users better than before and, as a result, observed increases in user activity and first-time purchases.”

3. Driving ecosystem growth on both the buyer and seller side

While the majority of their experiments have focused on the buyer side, Whatnot also conducts experiments on the seller side to encourage desired behaviors, such as scheduling more live streams and sharing referral links. This strategy has helped increase engagement and growth across their ecosystem.

Whatnot focuses on driving the right behaviors to enhance its platform. For example, Whatnot tested different upranking and downranking algorithms based on the signals received, prioritizing the display of the most engaging streams to new users to make a strong first impression.

Todd summed up Whatnot’s approach: “We aim to be intelligent in setting up hypotheses and use Statsig to test the implementations. Then we revisit and iterate, combining the data with offline analysis.”

Migrating to warehouse native experimentation

As experimentation expanded and teams became more data-driven, Whatnot shifted focus to unlocking more value from the data stored in their warehouse. Pivoting to Statsig Warehouse Native helped reduce experimentation times while providing deeper analytical insights.

“Warehouse Native experimentation allows for faster iterations, offers more nuanced insights, and makes it easy to add another dimension and slice through it,” Todd explained.

Todd highlighted how Warehouse Native gave them better early signals. “Previously, we could only split the data between iOS and Android, but Warehouse Native has enabled dimensional analysis — we can actually go in and cut the data by attributes like buyer classification or product categories. This gives us a stronger sense of how to launch successfully to different segments of users.”
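The kind of dimensional cut Todd describes can be sketched as computing a metric's lift separately for each segment. This is an illustration, not how Statsig Warehouse Native computes results: the row layout, the group names `control` and `test`, and the segment labels are hypothetical, and the function returns point estimates only, whereas a real analysis would also need a confidence interval for each segment.

```python
from collections import defaultdict
from statistics import mean


def lift_by_segment(rows):
    """rows: iterable of (group, segment, value), where group is "control" or "test".

    Returns {segment: relative lift of test over control} for segments
    that have data in both groups and a nonzero control mean.
    """
    by_segment = defaultdict(lambda: {"control": [], "test": []})
    for group, segment, value in rows:
        by_segment[segment][group].append(value)

    lifts = {}
    for segment, groups in by_segment.items():
        control, test = groups["control"], groups["test"]
        if control and test and mean(control) != 0:
            lifts[segment] = (mean(test) - mean(control)) / mean(control)
    return lifts


# Hypothetical per-user GMV rows, cut by a buyer-classification attribute.
rows = [
    ("control", "new_buyer", 10), ("control", "new_buyer", 20),
    ("test", "new_buyer", 15), ("test", "new_buyer", 25),
    ("control", "repeat_buyer", 50), ("control", "repeat_buyer", 50),
    ("test", "repeat_buyer", 45), ("test", "repeat_buyer", 55),
]
print(lift_by_segment(rows))  # new_buyer: ~0.333, repeat_buyer: 0.0
```

In this toy data the pooled lift is about 7.7%, but the per-segment view shows that all of it comes from new buyers, which is the kind of signal that informs how to launch to different user segments.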

Unlocking flexibility, trust and agility

Jared Bauman, Engineering Manager, Machine Learning, noted that tuning the defaults for CUPED (Controlled-experiment Using Pre-Experiment Data, a variance-reduction technique that adjusts each user's metric using their behavior before the experiment) paid off:

“We changed our CUPED window from 7 to 14 days and saw meaningful variance reduction on our most important metrics. Similarly, lower winsorization rates proved to be more advantageous to us because we have a bit of a skewed business.”

Warehouse Native experimentation also earned more buy-in from teams, who came to trust the results. Jared explained that the experiment results weren’t a black box: anyone with concerns could inspect the underlying SQL query to check for correctness. “People trust the results far more when you can say, ‘This is a Statsig result, and here is the query to check for correctness if you’re really concerned.’”

Jared also shared how their agility improved with Warehouse Native.


Watch this recording of our webinar on Warehouse Native experimentation in which Jared shares more insights:

Heading into Year 3

Todd shared that the road ahead for Whatnot involves continuing to improve operational efficiency, deepening the team's understanding of its North Star metrics, and making people more aware of guardrail metrics and broader context.

About Whatnot

Whatnot was founded in 2019 with the belief that shopping should be a fun and social experience. Today, Whatnot is North America and Europe’s largest livestream shopping platform to buy, sell, and discover the things you love. Millions have rediscovered the joy of shopping alongside a global community of people who share the same interests. Whatnot has helped thousands of sellers turn their hobby into a passion, their passion into a business, and make a living from what they love. Whether you’re looking for trading cards, comic books, fashion, beauty, or electronics, Whatnot has something and someone for everyone. Today, over 200 products are sold on Whatnot every minute. Discover more at www.whatnot.com or download the Whatnot app on Android or iOS.